More than two thirds of enterprise security teams worry that generative AI tools could accidentally leak sensitive data or open new threat pathways. That single statistic should grab your attention. Microsoft Copilot, deeply woven into Microsoft 365 since late 2023, is rapidly changing the way businesses communicate and collaborate through email. Yet the same incredible power that helps people write faster, summarize documents, and handle mountains of messages has become an irresistible target for cybercriminals. This is not some distant future problem. It is happening now. Microsoft Copilot malware attacks are already testing the limits of security infrastructure built for a pre-AI era, and organizations must pay attention.

Copilot’s ability to scan, parse, and reply to email on your behalf is at the heart of the risk. Since this tool has high-level permissions and can automate large amounts of content, it is an obvious prize for attackers. If malicious prompts, infected links, or even corrupted data are fed into Copilot, the AI could be tricked into acting against your interests. We are talking about an entire ecosystem of Microsoft Copilot malware vectors: zero-click exploits that do not need you to do anything, Copilot phishing emails designed to look legitimate, and malicious Copilot links that slip past traditional filters. As organizations push toward more AI-driven workflows, the stakes could not be higher.

Cybercriminals have seized on these weaknesses to develop new social engineering approaches, prompt injections, and supply-chain attacks that weaponize Copilot. In a business environment where the average worker receives more than 120 emails every day, the chance of a single compromised email reaching Copilot is significant. Once that happens, Copilot can act on harmful commands in ways that overwhelm legacy security controls. That is why so many organizations are asking if their own AI adoption plans are secure enough to handle this emerging class of malware.

In this long-form analysis, we will break down exactly how Microsoft Copilot malware works, explore real-world Copilot phishing emails and Copilot email scams, and look at how malicious Copilot links are crafted to slip through defenses. We will also explain Microsoft’s official response, what security teams need to do right now, and how policymakers should rethink the future of AI security. Because when it comes to Copilot’s powerful role in your email environment, understanding the risks is no longer optional. It is essential.

Next, we will examine exactly how Microsoft Copilot was designed to handle email and why that functionality has become such a critical threat target for modern attackers.

Introduction to Microsoft Copilot and Its Email Features

What is Microsoft Copilot?

Microsoft Copilot is a generative AI system embedded throughout the Microsoft 365 suite. Launched broadly in late 2023, it promises to transform how people work by integrating large language models with business data, enabling faster document creation, smarter meeting summaries, and automated workflows. The technology was designed to increase efficiency and reduce repetitive tasks, saving valuable time for employees. Yet the growing reach of Microsoft Copilot has also drawn sharp questions from security teams about whether it is properly shielded against cyberattacks, especially considering how closely it interacts with sensitive corporate data. When a tool has the ability to read, write, and summarize communications, including email, it becomes a tempting target for attackers deploying Microsoft Copilot malware.

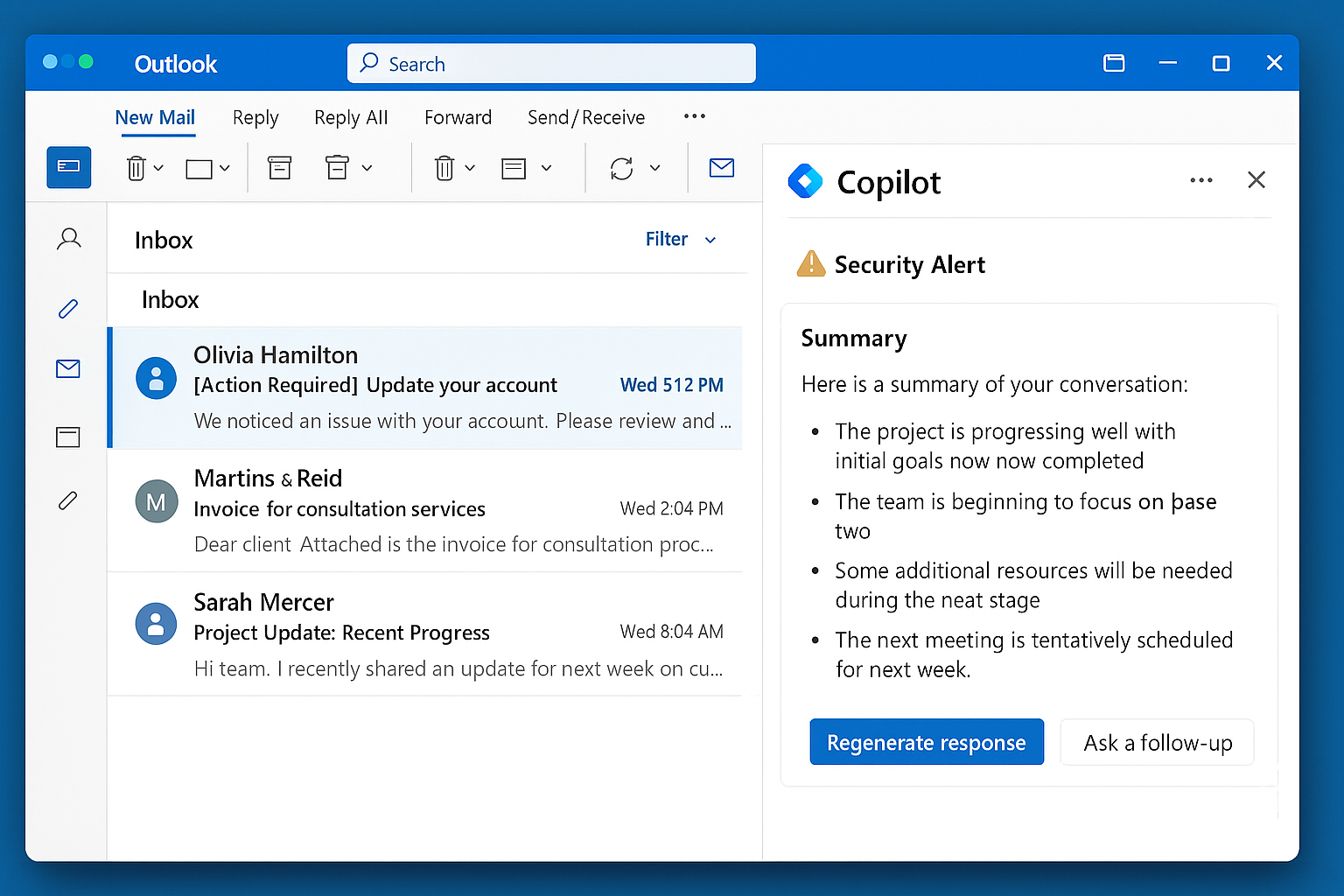

Copilot’s Integration with Outlook

One of the most powerful features of Microsoft Copilot is its seamless integration with Outlook. Copilot can compose entire emails, generate quick responses, pull relevant data from past conversations, and even rewrite messages with a more professional tone. In a business world where professionals face dozens or even hundreds of messages daily, that automation is invaluable.

However, the same capabilities can become dangerous if they are hijacked. A malicious actor could feed Copilot carefully crafted inputs, known as prompt injections, and cause the AI to leak private information or execute harmful instructions. These Microsoft AI security risks extend beyond a single message. They open the door for systemic compromise if attackers figure out how to repeatedly inject dangerous content through Copilot phishing emails.

Why Email is a Critical Vector

Email has always been one of the most exploited entry points for attackers, and Microsoft Copilot’s role in email makes it doubly concerning. The system has permission to read and act on large amounts of user-generated data, which means even a small vulnerability could cascade into a massive breach. Unlike traditional phishing, where an attacker must convince a human to click a link, malicious Copilot links can take advantage of Copilot’s automated functions. When Copilot processes a malicious email or command, the result might be immediate and large-scale.

These Copilot email scams are evolving to blend in with legitimate content, which makes detection even harder for older security tools. That is why the concept of malicious Copilot links is so concerning: they might exploit Copilot’s trusted role to deliver malware right into an organization’s core systems.

Early Security Concerns

Security experts began sounding the alarm on Microsoft Copilot malware before it was even fully deployed. In a 2024 survey, 67 percent of enterprise security teams reported concerns that Copilot or similar AI systems might expose sensitive company information or act unpredictably. Since Copilot can parse vast amounts of text, its attack surface is wide and largely new to traditional cybersecurity models. That means defenders must adapt their thinking to address both the code behind Copilot and the human mistakes that can feed it corrupted or malicious prompts. These combined concerns have placed Microsoft Copilot malware at the center of emerging policy discussions about Microsoft AI security risks.

Understanding these capabilities and their vulnerabilities is the first step to preparing a stronger defense. Next, we will break down how malware is actually getting into Microsoft Copilot emails, from zero-click exploits to covert injections.

How Malware Is Hiding in Microsoft Copilot Emails

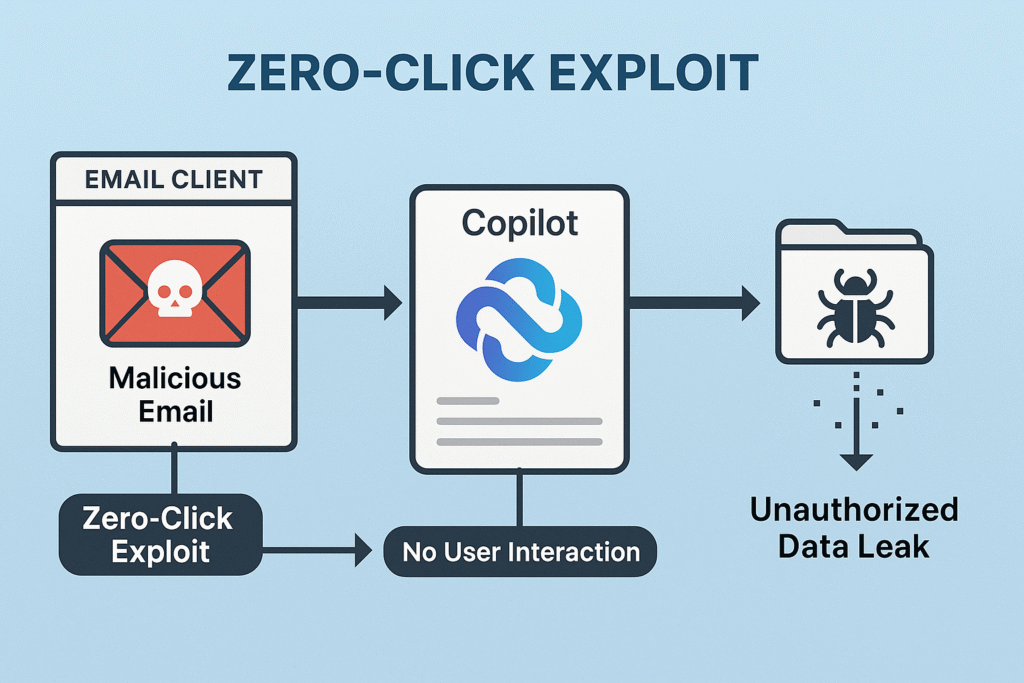

Exploiting Zero-Click Vulnerabilities

One of the most troubling realities about Microsoft Copilot malware is how attackers can abuse zero-click vulnerabilities to compromise systems without any user interaction. A critical case emerged with CVE‑2025‑32711, commonly referred to as the EchoLeak flaw, which allowed malicious commands to be embedded directly in email content. Once Copilot scanned the infected message, it could trigger data exfiltration or other dangerous actions automatically. This illustrates how Copilot phishing emails and malicious Copilot links do not always rely on a user making a mistake. Instead, they can weaponize the very AI that is meant to protect and support the user.

The EchoLeak scenario highlights a broader shift in attacker strategy. Where human error used to be the first step of a phishing campaign, Microsoft Copilot malware has now become a vector through zero-click or single-click exploits. The presence of a powerful language model in the loop means attackers have a much larger attack surface, making traditional protections insufficient.

Prompt Injection and ASCII Smuggling

Prompt injection is another pathway for malicious actors to break Copilot’s defenses. In this scenario, an attacker embeds hidden instructions within what appears to be normal text. Since Copilot reads and summarizes messages, those instructions can activate inside the model, instructing it to share sensitive information or connect to malicious servers. Techniques like ASCII smuggling make these attacks even more insidious by disguising commands in harmless-looking characters.

These methods have already been tested by security researchers, who proved that Copilot can be manipulated through prompt-based attacks. This reinforces the need to treat all inputs as potentially dangerous, even when they seem to come from trusted sources. As more organizations automate their workflows, Copilot phishing emails with hidden prompt injections could become a preferred tactic for attackers.

For a deeper technical dive on how prompt injection works, you can check out our breakdown in AI-Assisted Hacking: How Cybercriminals Are Weaponizing AI in 2025, which covers these exploits in detail and their rising impact across multiple AI tools.

Malicious Links and Covert Commands

Beyond prompt injection, malicious Copilot links present a dangerous attack vector. Traditional phishing campaigns usually focus on human clicks, but Copilot can be tricked into following links automatically as part of its summarization or content-checking features. Attackers have begun experimenting with cloaked commands embedded in seemingly normal hyperlinks, knowing Copilot might visit those links or parse the content to help a user. This creates a perfect bridge between malicious Copilot links and broader Copilot phishing emails that push users to trust fraudulent content.

Security experts warn that these covert commands could become the main entry point for future Copilot email scams. If an attacker crafts the link with precise instructions and hides them in HTML or metadata, Copilot’s powerful reading ability might execute those instructions without traditional security filters blocking the action.

Delivery Tactics

Delivering Microsoft Copilot malware through these vectors can happen in multiple ways. Attackers have impersonated known contacts, abused legitimate-looking subject lines, and even used stolen internal branding to build trust. They also use supply chain compromise methods to place malicious emails from what appear to be safe vendor addresses. Once the email hits Copilot, the AI’s workflow can pick up the malicious code or commands and treat them as legitimate.

This layered approach, combining zero-click flaws with malicious Copilot links, is why the threat landscape has grown so quickly. Cybersecurity professionals must now prepare for threats that exploit both human behavior and AI automation. That means building defenses that can identify dangerous content before Copilot even sees it.

These emerging threats are reshaping the rules of phishing. Next, we will examine real-world examples where Copilot was directly targeted or manipulated, showing how these ideas have moved beyond theory and into practice.

Real-World Cases of Copilot-Driven Malware

The EchoLeak Disclosure

In June 2025, cybersecurity firm Aim Security revealed a major Microsoft Copilot malware vulnerability known as EchoLeak, which carried a severity score of 9.3 out of 10. EchoLeak allowed attackers to embed malicious instructions inside email text, exploiting Copilot’s automated processing to leak sensitive data without any user clicks. Microsoft responded with a server-side patch, but the episode highlighted how Copilot phishing emails and malicious Copilot links could bypass traditional human-driven filters.

EchoLeak is a dramatic warning about the next generation of attacks. As Copilot processes email autonomously, the line between legitimate communication and hidden commands becomes harder to enforce. Security researchers called EchoLeak a pivotal moment because it showed that zero-click vulnerabilities could compromise thousands of accounts in seconds if left unpatched. This has elevated the conversation around Microsoft AI security risks and forced companies to rethink how they protect email data from advanced AI-assisted malware.

LOLCopilot at Black Hat

At Black Hat 2024, researcher Michael Bargury showcased LOLCopilot, a demonstration of how Microsoft Copilot could be weaponized to automate spear phishing. Using Copilot’s summarization features, LOLCopilot automatically crafted personalized, malicious messages that bypassed many spam filters. The demonstration proved that Copilot phishing emails could be scaled almost instantly, posing a new level of threat that legacy security systems could not fully address.

By leveraging Copilot’s natural language capabilities, LOLCopilot showed attackers can move far beyond traditional phishing templates. Instead, they can build dynamic, individually tailored messages that make malicious Copilot links look authentic, fooling even trained users. This underscores the critical need for security teams to understand how Copilot email scams might evolve with advanced AI prompting.

As we saw with Telegram’s zero-day vulnerabilities, zero-click exploits can devastate trust in communication platforms. For a deeper look at those weaknesses, you may want to review Telegram Zero-Day Vulnerability: 5 Terrifying Risks You Must Know, which further explores these evolving vectors.

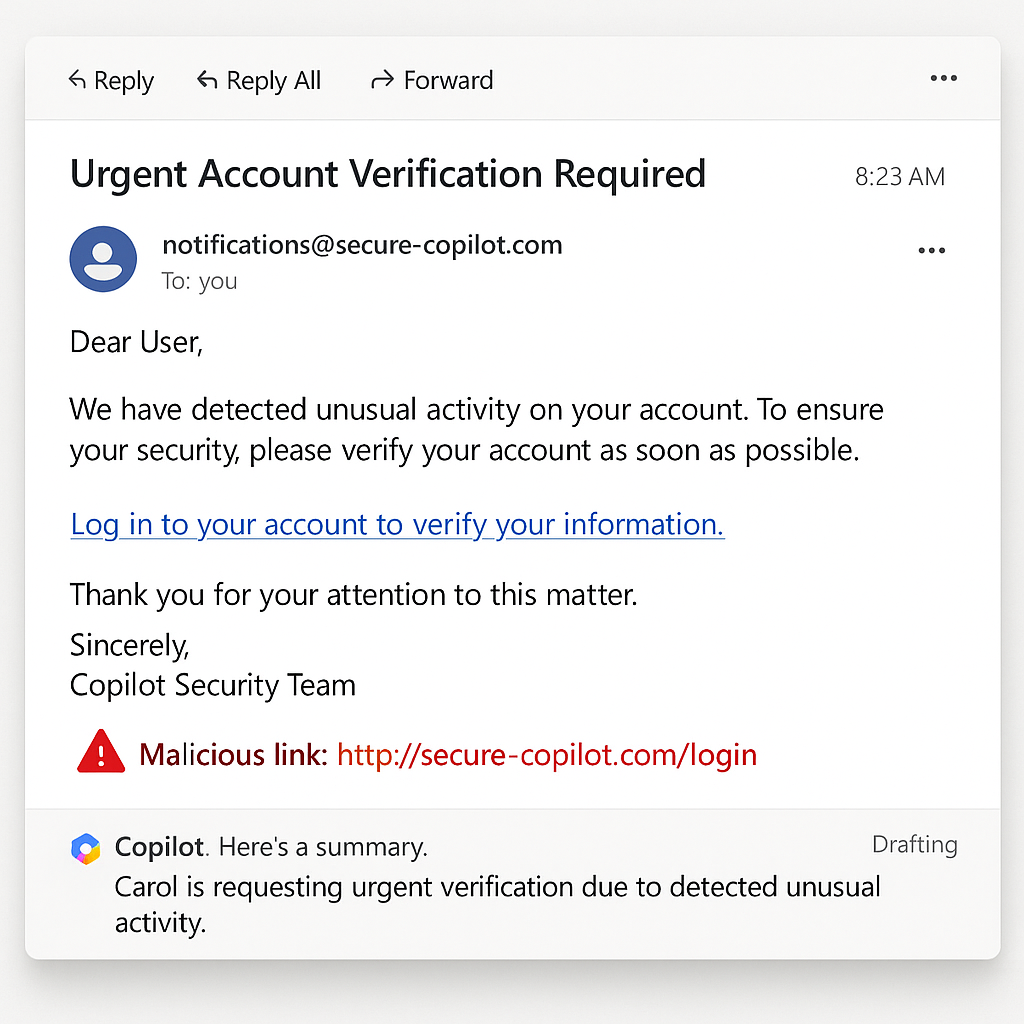

Cofense Phishing Campaign

In another concerning case, Cofense documented a phishing campaign that impersonated Copilot itself, registering a spoofed domain called ubpages.com to harvest credentials from unsuspecting victims. These attackers designed emails to look as if they came from Microsoft’s legitimate Copilot services, exploiting user trust and familiarity. Once the victim interacted with these messages, Copilot might scan the contents and store the malicious data for future use, creating a layered vulnerability that could be exploited repeatedly.

This type of brand impersonation takes advantage of Copilot’s powerful reputation. When a user sees what appears to be a genuine Copilot notification, they are far more likely to click a link or reply without suspicion. That is exactly why Copilot phishing emails are growing in popularity among sophisticated criminal networks.

Broader Industry Reaction

The cybersecurity community has responded to these incidents with heightened urgency. Microsoft published detailed advisories about EchoLeak and similar flaws, emphasizing that their engineering teams were working on robust server-side patches. Meanwhile, independent experts have warned that Copilot’s design places it in a uniquely vulnerable position. Its advanced automation capabilities, combined with access to user inboxes, means any breach could move faster and do more damage than traditional email malware.

These concerns are feeding new security policies around Microsoft AI security risks, and regulators are beginning to study whether Copilot-like systems need additional oversight. The stakes have grown too large to ignore. In the next section, we will explore practical ways to detect and prevent Copilot email scams and protect against the growing risk of malicious Copilot links.

Identifying and Preventing Copilot Email Scams

Advanced Email Security Tools

As Microsoft Copilot malware continues to evolve, defenders must lean on advanced security tools designed to catch sophisticated attacks. Microsoft Security Copilot has integrated phishing-triage agents capable of processing more than 30 billion phishing emails in a single year, according to the company’s 2025 disclosures. These AI-driven agents scan for unusual patterns, suspicious attachments, and malicious Copilot links before they reach human inboxes. That level of defense is now essential since Copilot phishing emails can blend seamlessly into normal corporate communications.

Security platforms are also layering behavioral analytics on top of standard scanning, looking for indicators of compromise inside Copilot’s workflow. This proactive defense helps spot malicious Copilot links even if they bypass traditional keyword-based filters. As attackers innovate, these multilayered strategies will be critical for defending against Copilot email scams and other Microsoft AI security risks.

Training and Awareness

Technology alone cannot stop every attack. Employees still need education on how to identify Copilot phishing emails and spot brand impersonation tactics. In the Cofense spoofing campaign, attackers faked Copilot branding so convincingly that many users fell for the trick without hesitation. Awareness training should emphasize how to verify senders, cross-check domain names, and treat urgent messages with skepticism.

Security leaders must repeat this training regularly, especially as Copilot’s capabilities expand. When staff understand the unique risk of Microsoft Copilot malware and related Copilot email scams, they are far less likely to trust malicious Copilot links embedded in everyday-looking emails.

Input Controls and Prompt Filtering

One critical strategy for stopping Microsoft Copilot malware is controlling what data Copilot is allowed to process. Researchers have demonstrated that prompt injection and hidden command techniques can compromise Copilot’s reasoning and create new attack paths. That means organizations must treat Copilot prompts as a security boundary, applying filters and sanitization rules before letting them reach the AI.

These prompt-filtering mechanisms are comparable to web application firewalls, but for AI language inputs. By blocking suspicious phrases or command-like patterns, defenders can reduce the risk of prompt injection attacks or covert malware signals hidden in legitimate-looking messages.

Proactive Monitoring and Logging

Finally, continuous monitoring of Copilot activity is essential. Security teams should maintain audit trails of Copilot’s decision-making, reviewing logs for abnormal requests, data leaks, or signs of compromise. These logs provide a baseline so teams can identify if Copilot was exposed to malicious Copilot links or Copilot phishing emails that triggered harmful behavior.

Monitoring also supports legal and compliance needs, providing evidence of proper controls in case a breach occurs. As more organizations rely on Copilot for critical business tasks, logs will help prove that security protections were in place and working.

The combination of AI-backed scanning, staff education, prompt filtering, and robust monitoring creates a much stronger posture. Next, we will look closely at how Microsoft itself has responded to these challenges and what safeguards they are planning for the future.

Microsoft’s Response and Future Safeguards

The EchoLeak Patch

Microsoft moved quickly to address the EchoLeak vulnerability once it became public in June 2025. EchoLeak exposed Copilot to a zero-click exploit, allowing attackers to insert harmful instructions directly into an email message that Copilot would then process without human oversight. Microsoft deployed a server-side patch to neutralize the threat, confirming that customers did not need to take manual action. This response demonstrated that even though Copilot is built on advanced models, it still requires traditional security patching practices to handle Microsoft Copilot malware effectively.

The EchoLeak fix offered temporary relief, but many experts say these types of vulnerabilities will continue to emerge as Copilot expands its capabilities. Organizations should treat the EchoLeak event as a sign that AI-driven tools will remain high-priority targets, particularly in handling Copilot phishing emails or malicious Copilot links.

Security Copilot Agent Expansion

In March 2025, Microsoft announced an expansion of its Security Copilot program, rolling out six new built-in security agents and partnering with five external security platforms for deeper integration. These security agents focus on phishing triage, suspicious link detection, and data exfiltration monitoring inside the Microsoft 365 environment.

This large-scale rollout demonstrates a recognition that Microsoft AI security risks are not theoretical. Threat actors have already proven they can weaponize Copilot features for Copilot email scams and malicious Copilot links, so dedicated detection systems are no longer optional. They are now a required part of any serious Microsoft 365 deployment.

Enhanced Defender for Office 365 and Teams

Microsoft also invested in strengthening Defender for Office 365 and extending protections into Teams. The updated Defender platform scans attachments, URLs, and conversation threads for patterns associated with Microsoft Copilot malware. By improving coverage in Teams, Microsoft is acknowledging that Copilot’s capabilities cross communication channels and therefore security controls must follow that same cross-platform approach.

With more users collaborating in Teams, attackers see opportunities to sneak in Copilot phishing emails or malicious Copilot links through shared messages or even group chats. Defender for Office 365 aims to shut down these attack surfaces before they reach Copilot’s automated features.

Ongoing Risk Monitoring

Microsoft has pledged to keep monitoring for new exploits targeting Copilot’s advanced features. This includes additional telemetry to track prompt injection attempts, hidden malicious Copilot links, and any other suspicious Copilot email scams that arise. Security teams at Microsoft continue to collaborate with third-party researchers to identify risks early, patch vulnerabilities, and publish detailed technical notes for customers to follow.

These efforts are a sign that Copilot’s integration with Outlook and other Microsoft services is here to stay, and so is the responsibility to protect it. Users should stay updated on security bulletins and apply policy changes whenever new threats are discovered. That is the only sustainable way to keep Microsoft Copilot malware from becoming a persistent risk.

Security is never finished, and Microsoft’s evolving safeguards will only be as strong as the users and organizations who apply them. In our final section, we will look at what you can do right now to protect your systems and build a resilient security culture around Copilot.

Conclusion and Action Steps for Users

Key Steps to Take Now

There is no doubt that Microsoft Copilot malware has changed the cybersecurity risk landscape. Organizations cannot afford to ignore the growing threat of Copilot phishing emails, malicious Copilot links, and other Copilot email scams. The very first step is to verify that your systems are fully patched, including protections against CVE‑2025‑32711. Security updates for Copilot’s zero-click flaws are crucial because they close the door before attackers even attempt to exploit these vulnerabilities.

Next, administrators should disable unnecessary external email ingestion into Copilot where possible. This limits the number of potential entry points for malicious instructions. Pair this with layered phishing detection tools, such as the advanced Microsoft Security Copilot agents, to proactively catch suspicious patterns before they reach inboxes. Staff should be continuously trained to recognize suspicious activity, even in AI-processed messages, and know how to verify sender domains and links.

The Importance of Continuous Vigilance

Staying safe in a world of advanced AI means developing habits that keep pace with adversaries. Microsoft AI security risks will continue to evolve as Copilot becomes more capable, so organizations must prepare for the possibility of new vulnerabilities and exploits. Monitoring Copilot’s logs, checking for suspicious queries, and keeping an eye on prompt activity will help you respond faster to an unexpected compromise.

Security experts have long warned that overreliance on any automated system is dangerous without proper human oversight. Copilot is no exception. It should be treated as a privileged part of your environment with extra safeguards to monitor and validate its decisions.

Building a Resilient AI Security Culture

AI tools are powerful, but they demand responsible use. Leaders should build a culture of security that treats Copilot as a critical component of their workflow, not just another productivity app. This means writing clear policies for AI input handling, keeping up with Microsoft’s ongoing security bulletins, and developing in-house expertise to review logs and responses from Copilot.

If you would like to see broader frameworks on security culture for AI, check out our piece AI Employee Cybersecurity: 5 Hidden Risks for 2026, which discusses why these strategies matter for every organization. By aligning technical controls with human-centered policies, you can reduce the risk of Copilot phishing emails and malicious Copilot links turning into a larger breach.

AI is rewriting the rules of cyber conflict. Our briefings cover what regulators miss, from Volt Typhoon to digital ID rollbacks. Stay ahead of policy, strategy, and global risks, and join the Quantum Cyber AI Brief now.

With these steps in place, you will have a foundation for stronger defense against Microsoft Copilot malware and any evolving Copilot email scams.

In closing, the journey to secure AI-driven email tools is only beginning. The more proactive you are today, the less likely you will be tomorrow’s victim.

Key Takeaways

- Microsoft Copilot’s deep integration with Outlook and Microsoft 365 has created new opportunities for attackers to deploy Microsoft Copilot malware that abuses trusted systems.

- Zero-click exploits like EchoLeak reveal how attackers can compromise Copilot without any user input, bypassing traditional security models.

- Copilot phishing emails and malicious Copilot links are growing in popularity because they target Copilot’s ability to automate reading and summarizing content, making detection more difficult.

- Microsoft has rolled out security patches, expanded Security Copilot agents, and enhanced Defender protections to counter these threats, but user vigilance remains essential.

- Building a resilient security culture and proactively monitoring AI prompts are vital to keep up with emerging Microsoft AI security risks and Copilot email scams.

FAQ

Q1: Is Microsoft Copilot safe to use for email?

Microsoft Copilot is generally safe if properly configured and regularly updated. Organizations should apply security patches, disable risky external ingestion features, and monitor Copilot’s behavior to minimize exposure to Microsoft Copilot malware.

Q2: What is EchoLeak?

EchoLeak is a zero-click vulnerability, officially tracked as CVE‑2025‑32711, that allowed attackers to embed malicious instructions in emails processed by Copilot without any user clicks.

Q3: How can I recognize a Copilot phishing email?

Look closely at sender domains, watch for brand impersonation, and be cautious of urgent or unusual requests. Malicious Copilot links and Copilot email scams often appear highly authentic.

Q4: Does Microsoft plan future security upgrades for Copilot?

Yes. Microsoft has expanded its Security Copilot program with new AI-based agents, improved Defender for Office 365, and pledged ongoing monitoring and collaboration with security researchers to close future vulnerabilities.