In April 2025, Meta launched Llama 4, its latest large language model, and framed it as part of a major shift away from “woke” AI development, following an early-2025 rollback of its internal DEI (Diversity, Equity, and Inclusion) goals. The timing couldn’t have been more symbolic: just as Meta unveiled a new commitment to what it calls “viewpoint diversity,” several top AI researchers were walking out the door, and European privacy advocates were preparing legal complaints over Meta’s use of consumer data to train its AI models.

This wasn’t a quiet technical update. Rather, it was a calculated repositioning that has sparked a fast-moving Meta AI controversy. The company’s new AI alignment strategy seeks to counter what some critics have labeled “left-leaning bias” in generative AI systems. Llama 4 was pitched as offering more “neutral” responses on polarizing issues, aiming to restore balance to a space Meta claims had become skewed by progressive orthodoxy.

But neutrality is a fraught concept in artificial intelligence, particularly when wielded by a platform as politically powerful and historically polarizing as Facebook’s parent company. What Meta frames as balance, critics frame as appeasement. And beneath the surface of corporate PR lies a complicated trade-off: by stepping back from ethical commitments like DEI and embracing a new branding strategy rooted in the anti-woke tech backlash, Meta may have opened itself up to a range of new vulnerabilities.

This post explores the roots of the Meta AI controversy, examining why the company abandoned DEI principles in favor of “serving everyone,” how this decision plays into current Facebook AI politics, and why it may ultimately hurt Meta more than it helps. We’ll walk through five distinct failure scenarios, from regulatory crackdowns to internal talent loss, and explain what the broader industry can learn from this unfolding AI ethics debate in 2025.

To get weekly breakdowns like this on AI policy, surveillance threats, and cybersecurity shifts, subscribe to our newsletter here. Now, let’s unpack exactly what Meta is doing, and why it could backfire.

What Is Meta’s “Anti-Woke” AI Strategy?

Launch of Llama 4 and Shift in Tone

Meta’s April 2025 release of Llama 4 wasn’t just a technical upgrade; it was a rhetorical pivot. Marketed as a model that “removes bias” and enables balanced responses to controversial topics, Llama 4 reflects Meta’s embrace of “viewpoint diversity” as a core design principle. This sets it apart from many previous large language models, which aimed to avoid misinformation, hate speech, or marginalizing language by embedding progressive-leaning ethical filters. The Meta AI controversy has largely centered on this shift.

By contrast, Llama 4 was openly pitched as a model that will not shy away from political nuance, even if it means giving credence to ideas typically flagged by older AI guardrails. Axios described the launch as an “AI bias fight” in which Meta consciously moved away from default progressive content moderation models to embrace a more libertarian ethos around speech and access.

Internal Abandonment of DEI Goals

This strategic shift was not limited to the product level. Internally, Meta executives issued a memo to employees in early 2025 announcing the rollback of the company’s DEI programs. The justification was revealing: rather than aiming to uplift marginalized voices, Meta would now focus on “serving everyone,” a phrase designed to reframe equity-focused work as exclusionary.

The memo was shared widely within the tech world and confirmed by multiple independent accounts. Critics interpreted it as part of a broader trend of corporate retreat from race- and gender-conscious policy, especially in politically volatile industries like AI.

Appeasement of Anti-Woke Critics

Meta’s pivot didn’t emerge in a vacuum. In recent years, the company has come under growing pressure from conservative lawmakers, media outlets, and advocacy groups who argue that AI systems reflect a “left-wing” worldview. These groups have demanded greater transparency and ideological balance from AI platforms, particularly when those platforms are owned by companies already under fire for content moderation and algorithmic bias.

Rather than resisting this pressure, Meta appears to have embraced it. Many see Llama 4 as an olive branch to critics who believe tech giants like Facebook have historically suppressed conservative viewpoints. As Axios noted, this move “raises eyebrows” across the industry because it aligns with a larger anti-woke tech backlash, potentially at the cost of established ethical AI principles.

This embrace of ideological balancing may score Meta points in the short term. But it also sets the stage for longer-term instability, as the sections below explore.

Why Meta Is Betting on “Anti-Woke” Branding

Understanding Meta’s Rationale: From Fairness to Market Risk

While critics have described Meta’s pivot as capitulation to political pressure, the company may view it as a necessary recalibration to ensure broad-based trust in its AI systems. Internally, the argument likely rests on two pillars: perceived fairness and risk mitigation.

From a fairness standpoint, Meta executives have long fielded criticism that AI content moderation and generative models reflect a narrow ideological lens, one that leans progressive, particularly on issues of race, gender, and identity. In this view, retraining Llama 4 to present more ideologically “balanced” outputs could be framed not as a political concession, but as an attempt to increase representational diversity across viewpoints. This aligns with a broader corporate goal: to build systems that reflect the full spectrum of Meta’s global user base.

The second rationale is market risk. In the U.S., anti-DEI sentiment has become a lightning rod, with state governments, activist investors, and media outlets targeting companies seen as too politically “woke.” For a company under constant regulatory and political scrutiny, Meta may have calculated that the reputational and legal risks of maintaining explicit DEI filters outweighed the benefits.

Seen this way, the Llama 4 release and the end of DEI programs represent a strategic hedge: not an ideological endorsement, but a structural response to shifting political realities.

Reframing Neutrality to Appeal to Broader Markets

Meta’s strategic shift is not just technical; it’s deeply political. By publicly repositioning itself as an arbiter of “viewpoint diversity,” the company is responding to a growing cultural and consumer divide over what AI systems should and shouldn’t say. The Meta AI controversy rests heavily on this reframing of neutrality: what once meant centering ethical safeguards is now being sold as removing them altogether.

This shift mirrors a broader trend in corporate America: so-called “DEI fatigue.” Across industries, companies are scaling back their diversity, equity, and inclusion initiatives, citing backlash, budget cuts, or public skepticism. In this context, Meta’s move is both opportunistic and defensive. By walking away from explicit DEI programming, the company positions itself as above the fray, serving “everyone,” as its memo put it, rather than favoring any specific group.

But critics argue this branding amounts to ideological laundering. By abandoning equity-centered policies under the guise of neutrality, Meta appears to be conceding to pressure from anti-DEI factions while reaping PR benefits from appearing inclusive.

Political Climate and Strategic Alignment

The timing of Meta’s rebrand is no coincidence. Anti-DEI sentiment has surged in U.S. political circles, with several state legislatures rolling back affirmative action laws and high-profile lawsuits attacking race-conscious hiring and admissions. These movements have given new momentum to a broader anti-woke tech backlash, one that views AI ethics as a proxy for liberal overreach.

Meta’s shift happens just as U.S. political institutions, from Congress to the courts, are aligning with this new ideological wave. By pivoting early, Meta gains political cover. It signals to regulators and lawmakers, especially those hostile to Silicon Valley, that it is listening and adjusting. In doing so, the company reduces its profile as a target while aligning itself with a power structure that appears to be gaining traction in 2025.

This strategy is not without precedent. Tech firms often recalibrate public messaging to anticipate regulatory shifts. But in Meta’s case, the recalibration touches on core debates about Facebook AI politics and the limits of ethical alignment in AI development.

Influence of Executive Culture and Market Messaging

Meta’s leadership, long criticized for opaque decision-making and controversial policy pivots, is once again shaping the company’s public posture around internal ideology. CEO Mark Zuckerberg and his executive team have faced years of scrutiny over content moderation, algorithmic amplification, and election interference. The Meta AI bias debate allows them to reframe those critiques within a new, more favorable context: that of trying to restore balance in a polarized information environment.

This reframing strategy is visible in both the tone and language of Meta’s recent announcements. Rather than defending past decisions, executives are offering a fresh narrative: that AI should empower users by presenting multiple perspectives, not dictate a single ethical standard. In this version of events, Meta isn’t retreating from values; it’s elevating choice.

That narrative, however, is difficult to maintain when the company’s most significant AI actions involve dismantling programs once seen as core to its ethical commitments. In short, Meta’s new branding attempts to resolve a years-long identity crisis by embracing ambiguity. Whether that approach holds up under scrutiny is another matter.


The Hidden Risks of Politicizing AI

Erosion of User Trust

One of the most immediate dangers of the Meta AI controversy is the erosion of user trust. While Meta frames its new approach as offering ideological balance, many users see it as a politically motivated redesign that makes the platform less reliable. When AI outputs are shaped by shifting political winds rather than consistent ethical principles, credibility suffers.

This is not just theoretical. A study reported by PsyPost in 2024 found that people who disclose their use of AI are actually perceived as less trustworthy than those who don’t. In experiments, participants rated advice and responses attributed to AI as less credible, even when the content was identical to human-generated output. In the context of Facebook AI politics, where algorithmic trust is already fragile, this kind of perception drop could compound reputational damage.

When users start seeing Meta’s AI outputs as ideologically filtered, regardless of direction, public trust may decline across the board. A system perceived as “anti-woke” is, by default, no longer neutral in the eyes of many users. This leaves Meta vulnerable to skepticism from both the political left and center, with no guarantee of loyalty from the right.

Damage to Perceived Objectivity

The deeper issue is not that Meta is making changes; it’s that the changes are being justified with a rhetoric of objectivity that can’t withstand scrutiny. In the context of the broader AI ethics debate in 2025, objectivity isn’t simply about offering two sides of a story. It’s about how outputs are chosen, how language is framed, and whose perspectives are prioritized.

By abandoning DEI filters and shifting toward so-called “balanced” outputs, Meta risks giving legitimacy to views that were previously flagged for being misleading, discriminatory, or conspiratorial. That doesn’t just affect the model; it affects how users perceive Meta’s role in shaping discourse. And that perception, once lost, is hard to rebuild.

Competing platforms are already seizing on this moment. Open-source communities and rival LLMs have begun marketing themselves as “ethically consistent” or “open and transparent” in contrast to Meta’s evolving stance. The result? Meta may lose not just trust but market share, as developers and users seek models with clearer ethical grounding. In that sense, the anti-woke tech backlash may end up benefiting Meta’s competitors.

Reduced Transparency in Decision-Making

Another consequence of politicizing AI is that it incentivizes opacity. When companies try to appease every side in a culture war, they often stop explaining how their systems actually work. Transparency becomes a liability. That’s exactly what we’re beginning to see in the Meta AI controversy.

Rather than publishing clear alignment protocols or disclosing which perspectives are prioritized, Meta has adopted vague language around “serving everyone.” In practice, that vagueness prevents meaningful audits of its AI systems. Users, developers, and regulators are left guessing how decisions are made, especially on sensitive issues like race, gender, and politics.

This lack of clarity is dangerous. It creates room for conspiracy theories, political backlash, and further polarization. And in the long run, it undermines Meta’s ability to claim it is building trustworthy or responsible AI systems.

Backlash from Users, Developers, and Regulators

Regulatory Scrutiny and GDPR Violations

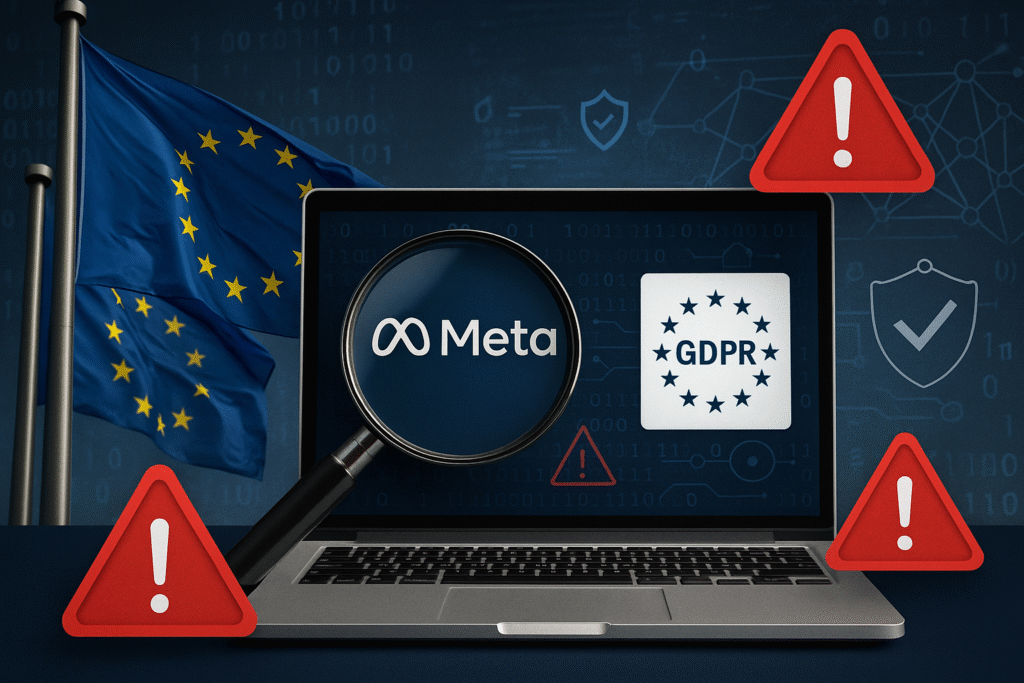

One of the most concrete consequences of the Meta AI controversy has come from Europe. In mid-2025, Meta began using EU user data to train its LLMs, including Llama 4, relying on an opt-out approach rather than explicit, opt-in consent from individuals. This decision immediately triggered complaints from data privacy watchdogs, who argue that the practice violates the EU’s General Data Protection Regulation (GDPR). Meta contends that its “legitimate interests” justify the processing; critics counter that training AI on personal data at this scale requires clear, affirmative consent.

The complaints are now being investigated across multiple EU jurisdictions. If these investigations result in adverse findings, Meta could face significant financial penalties, restrictions on AI deployments in the EU, or forced data deletion mandates. Privacy advocates, including prominent European digital rights groups, have also sounded the alarm over the broader implications: that Meta is setting a precedent for global AI data usage that skirts regulatory boundaries.

These challenges echo similar debates elsewhere, like the ongoing fight between Apple and the UK government over access to encrypted iCloud data. As AI regulation tightens, Meta’s willingness to test legal gray zones could put its AI strategy at odds with global data protection norms. Political repositioning at home does not exempt Meta from these rules.

For more, see our blog post: Apple vs. the UK: What the New Apple Encryption Backdoor Fight Means for Your Privacy and Quantum Cybersecurity in 2025.

Developer Discontent Over Pseudo-Open-Source Models

Meta has long attempted to position itself as a champion of “open-source AI,” particularly with its Llama models. But the developer community isn’t buying it. Llama 2 and 3 were released under custom licenses that restrict commercial use and include an acceptable use policy, terms the Open Source Initiative has said do not meet its Open Source Definition. Llama 4 has followed the same path, despite marketing that suggests otherwise.

This has triggered a wave of backlash. Developers argue that Meta’s licensing terms violate the spirit of open-source collaboration, locking out smaller companies and academic researchers while giving Meta full control over the ecosystem. As a result, some high-profile contributors have abandoned the Llama ecosystem entirely.

The damage here isn’t just reputational; it’s functional. A thriving open-source community is essential for model improvement, plugin development, and third-party integrations. If developers view Meta’s models as a walled garden masquerading as open source, adoption will suffer.

User Skepticism and Platform Fatigue

Users are also responding negatively. The combination of political framing, regulatory challenges, and developer backlash has created a perfect storm of distrust. For many users, Meta’s AI strategy feels incoherent at best and manipulative at worst.

This skepticism shows up in multiple ways:

- Low confidence in AI outputs on politically charged topics

- Reduced user engagement with Meta AI features like chat assistants or content recommendations

- Increased scrutiny from civil society groups monitoring tech ethics and misinformation

Importantly, the backlash doesn’t break cleanly along ideological lines. While some right-wing groups welcome the anti-woke shift, they remain wary of Meta’s history of content moderation. Meanwhile, progressive users see the strategy as a betrayal of ethical commitments. The net result: Meta risks alienating everyone while pleasing no one for long.

5 Scenarios Where Meta’s Strategy Could Fail

Regulatory Pushback and Legal Exposure

Perhaps the most immediate threat to Meta’s “anti-woke” AI strategy is legal. By harvesting EU user data for AI training without explicit opt-in consent, Meta has walked directly into the path of GDPR enforcement. European privacy regulators are already investigating Meta’s practices, and a ruling against the company could result in fines (GDPR allows penalties of up to 4% of global annual turnover), data use injunctions, and reputational fallout that spreads beyond Europe.

This is not Meta’s first regulatory showdown, but the stakes are higher now. In the past, fines over data breaches or ad targeting were seen as the cost of doing business. But AI training practices touch on fundamental rights to privacy, identity, and control over personal data. If regulators set a precedent here, it could ripple across the entire AI industry. Meta’s gamble may invite a policy backlash that reshapes how AI can legally be trained in democratic nations.

Loss of Top AI Talent

Internally, Meta’s decision to pivot away from DEI and reframe AI alignment as “viewpoint diversity” has alienated some of its most talented researchers. Multiple members of the Llama team have already left for Mistral, a French AI startup, and other labs pursuing less politicized research goals.

Losing senior AI talent is more than a personnel issue. These researchers bring deep institutional knowledge, model interpretability expertise, and the credibility Meta needs to compete with OpenAI, Google DeepMind, and Anthropic. When they walk, they don’t just take their skills; they take Meta’s future competitive edge with them.

This brain drain suggests that the company’s internal culture is not aligned with the values of the researchers it needs to retain. And in an industry where trust, alignment, and interpretability are critical frontiers, this puts Meta at a significant disadvantage.

Declining Public Trust in Outputs

The public’s confidence in Meta’s AI outputs has always been fragile. But framing Llama 4 as an “anti-woke” response model adds a new layer of political controversy. Research already suggests that disclosing AI use can reduce trust, and that penalty is likely to grow when users suspect a model’s output has been manipulated or ideologically slanted.

By marketing its model as ideologically balanced and moving away from ethics-aligned safety filters, Meta risks being seen not as neutral, but as reactionary. For users seeking objective, high-confidence answers, this perception is fatal. Even if the model’s performance is technically sound, the trust deficit may push users toward rival platforms that offer more clarity, more transparency, or a stronger ethical stance.

Friction with Developers and Open-Source Backlash

Developer backlash over Meta’s pseudo-open-source licensing is already fracturing the Llama ecosystem. Meta’s claims of open-source access are undermined by legal restrictions in its licensing agreements, leading developers to describe the system as a bait-and-switch.

As the developer community looks to models that offer real transparency and community governance, such as those emerging from Hugging Face, Stability AI, and even Mistral, Meta’s standing in the open-source world is weakening. If enough developers turn away, Llama’s long-term relevance could fade fast. Without grassroots adoption and third-party tooling, Meta’s AI ambitions may stall even as competitors surge forward.

Failures in Moderation and Misinformation Control

Finally, by loosening alignment controls in the name of “ideological diversity,” Meta is taking a major risk with content safety. Its past moderation failures, ranging from election misinformation to violent content, are well documented. Weakening these guardrails, especially within generative systems, risks flooding its platforms with harmful, misleading, or conspiracy-driven outputs.

This could have both platform-level and geopolitical consequences. Generative misinformation, when amplified at Meta’s scale, poses threats to public health, electoral integrity, and global security. For more context on this, see our post: AI-Powered Cyberwarfare in 2025: The Global Security Crisis You Can’t Ignore.

In short, Meta’s “anti-woke” AI strategy doesn’t just risk reputational damage; it opens the door to systemic failures with legal, societal, and national security implications.

What Tech Leaders Should Learn from This

Neutrality Is Not Apolitical

One of the most dangerous misconceptions in the Meta AI controversy is the idea that “neutrality” can be achieved by simply removing ethical safeguards. In practice, neutrality in AI isn’t the absence of values; it’s the result of deliberate choices about training data, alignment goals, and output constraints. Meta’s strategy, framed as an attempt to “serve everyone,” sidesteps this reality. By stripping away DEI frameworks and ethical filters, the company hasn’t eliminated bias; it has simply shifted the balance of who benefits.

This has implications far beyond Meta. Tech leaders must recognize that depoliticizing AI does not mean abandoning values. It means making them explicit, testable, and accountable. Pretending to be apolitical often results in systems that quietly reinforce dominant ideologies, because they no longer have mechanisms in place to challenge them.

If trust and fairness are your goals, transparency, not neutrality, is your tool.

Regulatory Alignment Matters More Than Culture War Points

Meta’s attempt to win a culture war PR battle could cost it dearly in regulatory arenas. From alleged GDPR violations in the EU to rising interest in U.S. AI safety legislation, the legal landscape for artificial intelligence is evolving fast. Companies that ignore this in favor of short-term ideological wins are playing a dangerous game.

Aligning your AI practices with emerging privacy, safety, and bias regulations isn’t just good ethics; it’s good business. The more AI becomes politicized, the more lawmakers will seek to clarify its boundaries. Tech leaders who see that shift coming and align early will be better positioned to shape the rules, not just follow them.

And the consequences of failing to do so are already visible in Meta’s regulatory struggles, particularly as privacy watchdogs intensify scrutiny of how user data fuels generative models.

For more, read our piece: DOGE IRS API Exposed: 5 Alarming Risks to Your Tax Data and Privacy.

Talent Retention Hinges on Vision and Values

Ethical clarity isn’t just about external messaging; it’s a key internal signal to your top engineers and researchers. As the Meta AI bias backlash intensified, some of the company’s best AI talent jumped ship to competitors like Mistral. Their departures, on this reading, were less about pay or prestige than about purpose.

AI researchers care deeply about alignment, interpretability, and ethics. If leadership abandons those priorities, it sends a signal that short-term politics matters more than long-term mission. That signal repels people who want to do meaningful, responsible work, exactly the people you need most to build reliable, safe, and competitive AI systems.

In an industry where innovation depends on retaining scarce technical talent, a values vacuum is a direct business risk.

Anticipate, and Respect, Legitimate DEI Critiques

While DEI frameworks are critical for ensuring equity and representation in AI development, leaders should acknowledge that critiques of these frameworks are not always rooted in bad faith. Overzealous implementation can backfire if it introduces opaque filters, suppresses legitimate dissent, or fails to evolve with cultural norms.

For instance, some critics argue that early AI safety efforts relied on centralized ethics teams that acted without sufficient external review, effectively creating black-box systems that filtered outputs in ways users didn’t understand. Others highlight that certain DEI approaches may unintentionally perpetuate bias by defining “harm” too narrowly or too ideologically.

These concerns don’t justify abandoning equity goals, but they do highlight the importance of transparency, pluralism, and iterative design in AI alignment. Ethical AI doesn’t mean enforcing a single worldview. It means building systems that are accountable, explainable, and adaptable, especially as social values evolve.

By acknowledging legitimate concerns and adapting to them in good faith, tech leaders can avoid binary traps like “woke vs. anti-woke” and instead focus on creating AI that earns public trust through clarity and accountability.

Public Trust Must Be Earned, Not Assumed

Finally, the broader lesson for tech leaders is that public trust in AI isn’t a natural byproduct of scale or name recognition; it’s something you earn, repeatedly, through consistency and clarity.

Meta’s attempt to reframe its AI as ideologically balanced may win points in some circles, but it undermines the confidence of users who no longer know what rules guide its outputs. The cost of that uncertainty? Users disengage, developers defect, and regulators crack down.

To counter this, AI companies must double down on ethical transparency, stakeholder dialogue, and public communication. Systems must be auditable. Alignment must be explainable. Goals must be declared, not buried in press releases.

For leaders looking to navigate these challenges, we recommend studying trusted AI practices and reading our post: AI Cyberattacks Are Exploding: Top AI Security Tools to Stop Deepfake Phishing & Reinforcement Learning Hacks in 2025, which shows how ethical design and technical rigor work hand in hand to prevent real-world harm.

Conclusion & Future Outlook

Meta’s anti-woke AI strategy is more than a brand refresh; it’s a high-stakes gamble on ideology, influence, and market positioning in the middle of an intensifying global debate over artificial intelligence. On the surface, the move to roll out Llama 4 as a “viewpoint diverse” model, coupled with the termination of internal DEI programs, seems designed to quiet conservative critics and recast Meta as a politically neutral player in a polarized industry.

But neutrality, when performed rather than practiced, often breeds distrust. The Meta AI controversy reveals the cost of abandoning ethical alignment in favor of ambiguous “balance.” As we’ve seen, this shift has already drawn regulatory scrutiny in Europe, provoked backlash from open-source developers, and led to the departure of top-tier AI researchers who no longer see the company as aligned with their values.

The Facebook AI politics underlying this pivot reflect broader tensions in the tech industry, where ethical commitments are too often sacrificed for optics, and complex social questions are reframed as product decisions. With the AI ethics debate gaining global attention in 2025, Meta’s decisions won’t just affect its brand; they may reshape the regulatory and social norms that govern how AI can and should be deployed.

Looking ahead, Meta’s AI future may be defined not by how many users adopt Llama 4, but by how many trust it, and how many regulators decide that trust has been violated. With global elections, new data privacy frameworks, and rising geopolitical risks all intersecting with AI deployment, the consequences of Meta’s strategy could extend far beyond Silicon Valley.

For other tech leaders, the lesson is simple: trust and alignment aren’t afterthoughts. They’re competitive advantages. The next generation of AI dominance will belong not to the fastest, but to the most accountable.

Key Takeaways

- Meta’s Llama 4 marks a strategic departure from DEI-focused AI development, sparking a global debate over AI bias and neutrality.

- Framing AI as “anti-woke” introduces major trust and credibility risks, even among ideologically sympathetic audiences.

- Regulatory exposure in Europe over user data practices could lead to fines, restrictions, and lasting damage.

- Developer backlash over pseudo-open-source licensing is hurting Meta’s standing in the AI ecosystem.

- Internal departures of top researchers suggest that abandoning ethical commitments comes with serious talent costs.

- Tech leaders must focus on transparency, stakeholder alignment, and values-based design to earn lasting public trust.

FAQ

Q1: What is Meta’s anti-woke AI strategy?

Meta has shifted its AI development to prioritize “viewpoint diversity” and abandoned DEI-based filters. This includes launching Llama 4 and ending internal DEI initiatives, positioning the company as ideologically neutral.

Q2: Why are developers criticizing Meta’s open-source claims?

While marketed as open-source, Llama’s licensing restrictions limit commercial use and violate open-source principles, frustrating developers and academic users.

Q3: What legal issues is Meta facing in Europe?

Meta is under investigation for training its AI on EU user data without proper opt-in consent, in potential violation of GDPR standards.

Q4: How could this strategy hurt Meta’s reputation?

The political framing of its AI model undermines public confidence in the system’s objectivity and transparency, fueling skepticism and user disengagement.

Q5: What can other tech companies learn from this?

Companies should treat AI alignment as a foundational issue, not a PR exercise, prioritizing ethics, regulatory compliance, and clarity to avoid long-term fallout.