AI Doesn’t Sleep—And Brute Force Attacks Don’t Need to Either

Brute force attacks used to be a waiting game. Hackers would launch endless combinations of passwords, hoping one would eventually break through. It took time, patience, and usually a poorly secured target.

Now, artificial intelligence has rewritten the rules.

Welcome to the new era of password cracking—one where hackers aren’t just guessing. They’re predicting. They’re adapting. And they’re doing it at speeds no human could ever match.

At Quantum Cyber AI, we’ve been tracking the disturbing evolution of these tactics in real time. What used to take days or weeks can now be accomplished in minutes—or even seconds—thanks to AI-trained models capable of intelligently navigating the most common human password patterns.

In this post, we’ll break down how AI-powered brute force attacks work, what tools and models are leading the charge (like PassGAN), why even strong passwords are becoming vulnerable, and—most importantly—what you can do to stay safe.


WHAT IS AN AI-POWERED BRUTE FORCE ATTACK?

The Old School: Dictionary Attacks and Guesswork

Traditional brute force hacking was like trying every key on a massive ring—eventually, one would work. This method involved trying all possible character combinations or cycling through common words in a dictionary. While effective on weak passwords, it was resource-heavy, easy to detect, and time-consuming.
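The difference between the two classic approaches fits in a few lines of Python. This is a minimal illustration that assumes an unsalted SHA-256 hash for simplicity. Real systems should store salted, deliberately slow hashes (such as bcrypt or Argon2), which make every guess far more expensive. The function names are hypothetical.

```python
import hashlib
import itertools
import string
from typing import Iterable, Optional


def sha256_hex(text: str) -> str:
    """Hex SHA-256 digest of a UTF-8 string (unsalted: for illustration only)."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def dictionary_attack(target_hash: str, wordlist: Iterable[str]) -> Optional[str]:
    """Hash each candidate word in order and stop at the first match."""
    for word in wordlist:
        if sha256_hex(word) == target_hash:
            return word
    return None


def brute_force(target_hash: str, alphabet: str, max_len: int) -> Optional[str]:
    """Try every string over `alphabet`, shortest first, up to `max_len` characters."""
    for length in range(1, max_len + 1):
        for combo in itertools.product(alphabet, repeat=length):
            guess = "".join(combo)
            if sha256_hex(guess) == target_hash:
                return guess
    return None


if __name__ == "__main__":
    common_words = ["123456", "password", "qwerty", "dragon"]
    print(dictionary_attack(sha256_hex("dragon"), common_words))  # dragon
    print(brute_force(sha256_hex("cab"), string.ascii_lowercase, 3))  # cab
```

The dictionary attack finishes after four hashes. The brute-force search over just three lowercase letters may need up to 18,278 (26 + 26² + 26³), and that count grows exponentially with length and alphabet size.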

Enter AI: From Guessing to Predicting

An AI-powered brute force attack doesn’t rely on randomness. Instead, it uses deep learning models trained on millions of leaked credentials to learn how people actually construct their passwords. Tools like PassGAN (Password Generative Adversarial Network) are leading this shift. PassGAN uses a machine learning architecture originally developed for generating realistic-looking images—and applies it to generating password guesses that look just like what a human would use.
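PassGAN itself is a full generative adversarial network, too large for a short snippet. The sketch below swaps in a much simpler technique, a character-level Markov (bigram) model, to show the same core idea: learn which characters tend to follow which in leaked passwords, then sample new guesses that look human-made. It is not PassGAN, and the tiny training list is made up for illustration.

```python
import random
from collections import defaultdict

# Multi-character sentinels, so they can never collide with a real password character.
START, END = "<s>", "</s>"


def train_bigrams(passwords):
    """Count how often each character follows another in the training set."""
    counts = defaultdict(lambda: defaultdict(int))
    for pw in passwords:
        chars = [START, *pw, END]
        for prev, nxt in zip(chars, chars[1:]):
            counts[prev][nxt] += 1
    return counts


def sample_password(counts, rng, max_len=16):
    """Walk the table, choosing each next character in proportion to its count."""
    out, prev = [], START
    while len(out) < max_len:
        followers = counts[prev]
        nxt = rng.choices(list(followers), weights=list(followers.values()))[0]
        if nxt == END:
            break
        out.append(nxt)
        prev = nxt
    return "".join(out)


if __name__ == "__main__":
    leaked = ["password1", "password123", "dragon1", "monkey123", "sunshine1"]
    model = train_bigrams(leaked)
    rng = random.Random(42)  # fixed seed so runs are repeatable
    print(sorted({sample_password(model, rng) for _ in range(10)}))
```

A bigram model only sees one character of context. PassGAN instead trains a neural network that can capture longer-range structure, such as a word followed by a year and a symbol.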

Key Features of AI-Powered Brute Force Tools:

- Predictive: They learn from massive real-world password dumps, like those from LinkedIn, RockYou2021, or Twitter.

- Adaptive: They refine guesses based on success/failure feedback loops.

- Scalable: They can attack thousands of login pages or systems in parallel.

- Disguised: They simulate human typing speed and login behavior to bypass detection.

🔍 Real-World Example:

Security researchers at Home Security Heroes ran PassGAN against millions of passwords from the RockYou leak in 2023 and reported that it cracked 51% of common passwords in under a minute.

WHY THESE ATTACKS ARE HARDER TO STOP

1. Speed + Scale = Mayhem

With cloud GPU instances or local NVIDIA setups, cracking tools can test billions of guesses per second against stolen password hashes offline. Against live login pages, attackers spread attempts across distributed botnets, so no single IP address sends enough requests to trigger alarms.

A password like “Summer2023!”, once considered reasonably secure, is now among the first guesses generated by AI models trained on human behavior and leaked password patterns.
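Some back-of-the-envelope arithmetic shows why pattern-aware guessing matters more than raw speed. The sketch assumes 10 billion guesses per second against a fast, unsalted hash, and a hypothetical “Word + Year + Symbol” template of 4 seasons, 41 years (1990 to 2030), and 10 trailing symbols. All three numbers are illustrative assumptions.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600
GUESSES_PER_SECOND = 1e10  # assumed GPU rate against a fast, unsalted hash


def exhaustive_guesses(alphabet_size: int, length: int) -> int:
    """Every possible password of exactly `length` characters."""
    return alphabet_size ** length


def template_guesses(words: int, years: int, symbols: int) -> int:
    """Candidates of the form Word + Year + Symbol, e.g. 'Summer2023!'."""
    return words * years * symbols


full = exhaustive_guesses(95, 11)  # any 11 printable-ASCII characters
pattern = template_guesses(words=4, years=41, symbols=10)

print(f"Exhaustive 11-char search: ~{full / GUESSES_PER_SECOND / SECONDS_PER_YEAR:,.0f} years")
print(f"Season + year + symbol:    {pattern:,} guesses, well under a millisecond")
```

Under those assumptions the exhaustive search over all 11-character passwords takes roughly 18,000 years, while the template space that contains “Summer2023!” holds only 1,640 candidates. A model trained on leaked passwords effectively learns templates like this one and tries them first.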

Published results from PassGAN running on modern GPUs put the figures at 51% of common passwords cracked in under a minute and 65% in under an hour, including those built from seasons, years, or simple substitutions.

2. Tools Are Open Source—and Everywhere

Platforms like GitHub host entire repositories of AI-based password cracking frameworks, complete with training data and tutorials. Threat actors no longer need custom tools—they just need time to fine-tune publicly available models.

Popular AI-Powered Password Cracking Tools Include:

- PassGAN – A deep learning model trained to generate realistic passwords.

- GptCrack – Uses large language models to guess passwords based on human patterns.

- GANcracker – Similar to PassGAN, but optimized for GPU-based training and inference.

- Hashcat + AI Wordlists – Combines traditional cracking with machine-generated password lists from AI.

3. Humans Are Predictable

Despite constant warnings, people continue to:

- Reuse passwords across sites

- Include birthdays, pet names, or years in passwords

- Choose common substitutions like “@” for “a” or “0” for “o”

AI thrives on these habits. The more predictable you are, the faster it wins.
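Substitutions add surprisingly little. The sketch below expands a word with a small, hypothetical table of look-alike characters. It is not the rule set of any particular cracking tool, but it shows how few variants a simple swap actually creates.

```python
from itertools import product

# Hypothetical substitution table: each letter maps to its common look-alikes.
SUBSTITUTIONS = {"a": "@4", "e": "3", "i": "1!", "o": "0", "s": "$5"}


def leet_variants(word: str) -> list:
    """Every combination of keeping a character or swapping in a look-alike."""
    choices = [[ch, *SUBSTITUTIONS.get(ch.lower(), "")] for ch in word]
    return ["".join(combo) for combo in product(*choices)]


if __name__ == "__main__":
    variants = leet_variants("password")
    print(len(variants))  # 54
    print("p@ssw0rd" in variants)  # True
```

Fifty-four variants is trivial for software that tests billions of guesses per second, which is why “p@ssw0rd” offers essentially no more protection than “password”.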

💡Learn more in our breakdown of how cybercriminals are using AI to automate password cracking and attack workflows in AI-Assisted Hacking: How Cybercriminals Are Weaponizing AI in 2025.

Real-World Cases: When AI Brute Force Becomes a Front-Door Key

AI-powered brute force attacks are no longer theoretical—they’re being deployed in the wild, and the cybersecurity community has started to take notice. Here are some documented and emerging examples of how AI-driven password cracking is moving from hacker forums to real-world exploitation:

1. PassGAN on GitHub: From Research Tool to Hacker Toolkit

In 2017, researchers from the Stevens Institute of Technology and the New York Institute of Technology developed PassGAN, a generative adversarial network trained on the RockYou password leak dataset. Its goal? To learn password patterns without predefined rules. The result was a model that could generate millions of plausible, human-like passwords, far better than traditional brute force scripts that relied on dictionary lists.

By 2021, multiple GitHub forks of PassGAN were openly available, optimized for GPU acceleration. In underground hacking communities, the model was repurposed for:

- Credential stuffing campaigns using leaked email databases

- Password spraying attacks on admin portals and corporate VPNs

- Targeting GitHub Actions and CI/CD environments using likely developer password combos (e.g., “Node123!”, “Docker2020!”, or variations with birth years)

Researchers found that PassGAN could guess over 30% of passwords in some enterprise environments when combined with social engineering data (names, birthdates, job titles).

2. AI in Cloud Attacks: A Growing Threat to Corporate Systems

AI-enhanced brute force and credential attacks are being actively deployed against cloud services and corporate environments.

📍 Case Study: Yum! Brands Ransomware Breach (January 2023)

In early 2023, Yum! Brands—the parent company of KFC, Taco Bell, and Pizza Hut—was hit by a ransomware attack that forced the temporary closure of nearly 300 locations in the United Kingdom. While Yum! did not disclose full technical details, post-incident analysis revealed that AI-powered automation was used to identify, prioritize, and extract sensitive data during the breach.

According to cybersecurity firm Oxen Technology, attackers used AI models to automatically classify high-value internal data and accelerate the data exfiltration process. The breach exposed corporate records and employee information, highlighting how even traditional ransomware operations are beginning to integrate machine learning to improve effectiveness and speed.

This attack underscores how AI doesn’t just enhance password guessing—it expedites the entire attack chain, from initial access to data extraction and monetization.

3. Corporate Credential Reuse and Predictive AI

Even Fortune 500 companies aren’t immune to password reuse. A 2022 audit by a cybersecurity firm found that over 50% of employees reused passwords across corporate and personal accounts—often with slight modifications (e.g., “WorkPass2022!” vs. “WorkPass2023!”).

AI models trained on breach corpora like the LinkedIn, Dropbox, and Canva leaks are now able to predict these mutations with alarming accuracy. In one red team engagement, an AI-assisted permutation engine allowed penetration testers to breach:

- A secure internal HR portal

- A shared G Suite account

- A legacy backup server

All through different variations of a single reused password structure.
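A hand-written version of that kind of mutation rule fits in a few lines. This is a simplified, hypothetical sketch, not the AI-assisted engine from the engagement: an AI model learns rules like these from breach data rather than having them coded by hand, but the output looks similar.

```python
import re


def year_mutations(password, years=range(2020, 2026), suffixes=("!", "#", "")):
    """Turn 'WorkPass2022!' into nearby variants like 'WorkPass2023!' or 'WorkPass2024#'.

    Assumes the password ends in a four-digit year, optionally followed by symbols;
    anything else is returned unchanged.
    """
    match = re.fullmatch(r"(.*\D)(\d{4})(\W*)", password)
    if match is None:
        return [password]
    base, _old_year, tail = match.groups()
    guesses = [f"{base}{year}{suffix}" for year in years for suffix in (tail, *suffixes)]
    return list(dict.fromkeys(guesses))  # drop duplicates, keep order


if __name__ == "__main__":
    print(year_mutations("WorkPass2022!"))
```

One leaked password becomes 18 ordered guesses, each of which is a plausible “next version” a user might choose.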

4. Simulating Human Behavior: Beating Login Throttles with AI

Many modern authentication systems rely on rate limiting, CAPTCHAs, or behavioral heuristics—such as typing speed and mouse movement—to detect and block brute force attacks. But AI is increasingly capable of mimicking human behavior to evade these defenses.

Techniques include:

- Simulated keystroke delays to mimic natural human typing patterns

- IP rotation and user-agent spoofing to resemble normal geographic and browser-based access

- Behavioral fingerprint emulation—such as matching mouse trajectories and screen interaction speeds—using machine learning to bypass bot-detection systems

While specific academic results on AI emulating typing biometrics in login environments remain limited, there is growing recognition in the cybersecurity field that AI-generated traffic is becoming indistinguishable from human input.
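One common countermeasure to IP rotation is to count failures per account rather than per IP address. The class below is a minimal, hypothetical sketch of a sliding-window limiter; the limits are assumptions. Production systems often prefer progressive delays to hard lockouts, since lockouts can be abused to deny service to real users.

```python
import time
from collections import defaultdict, deque


class AccountRateLimiter:
    """Block an account after `max_failures` failed logins within `window` seconds.

    Failures are keyed by username, so rotating IP addresses does not reset the count.
    """

    def __init__(self, max_failures=5, window=300.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.window = window
        self.clock = clock  # injectable so the behavior can be tested
        self._failures = defaultdict(deque)  # username -> timestamps of recent failures

    def _recent(self, username):
        """Drop failures older than the window and return the remaining timestamps."""
        stamps = self._failures[username]
        cutoff = self.clock() - self.window
        while stamps and stamps[0] <= cutoff:
            stamps.popleft()
        return stamps

    def is_blocked(self, username):
        return len(self._recent(username)) >= self.max_failures

    def record_failure(self, username):
        self._recent(username).append(self.clock())


if __name__ == "__main__":
    now = [0.0]
    limiter = AccountRateLimiter(max_failures=3, window=60, clock=lambda: now[0])
    for _ in range(3):  # e.g. three attempts, each from a different IP
        limiter.record_failure("alice")
    print(limiter.is_blocked("alice"))  # True
    now[0] = 61.0
    print(limiter.is_blocked("alice"))  # False
```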

A 2024 article from Tech Xplore reports that advanced AI models can now bypass behavioral bot detection tools, prompting cybersecurity experts to warn that visual bot challenges may no longer be sufficient defenses.

Additionally, phishing kits like EvilProxy and Muraena automate reverse proxies that relay a victim’s credentials and session cookies to the real site in real time. Because the input comes from a real person, it passes through behavioral detection systems undetected.

Why AI Keeps Winning: The Human Element

Despite advancements in cybersecurity infrastructure, human behavior remains one of the most significant vulnerabilities—and AI is increasingly built to exploit it.

💡 Guide on AI cybersecurity best practices for password hygiene and risk mitigation – 7 AI Cybersecurity Best Practices for Good Cyber Hygiene

📌 Password Reuse and Weaknesses

Research consistently shows that a majority of users still engage in high-risk password habits:

- 64% of users reused passwords across multiple sites, including business and personal accounts, according to the 2023 Annual Identity Exposure Report from SpyCloud.

- In 2023, NordPass published its annual password study, revealing that common passwords like “123456,” “password,” “admin,” and “qwerty” were still among the most used globally.

- Many users apply only slight modifications to old passwords (e.g., changing the year), which makes it easy for AI models trained on real-world data breaches to guess the next version.

These patterns make it easier for AI-driven brute force tools like PassGAN to succeed. In the original PassGAN paper, published on arXiv, the researchers reported that combining PassGAN’s output with HashCat’s matched 51% to 73% more passwords than HashCat alone.

📌 Personalized Guessing via Social Data

AI-assisted brute force attacks become more effective when they leverage publicly available personal data to generate highly relevant guesses:

- A 2023 Proofpoint report noted the rise of “personalized brute force” attacks, where hackers incorporate publicly available information like job titles, birthdates, and locations into AI-powered password generation.

Attackers may train models on leaked datasets and use variations like FirstName+BirthYear!, company names, or known hobbies—reducing the number of guesses needed for a successful breach.
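The arithmetic of a personalized guess list is what makes this effective. The sketch below combines a few hypothetical public facts (two names and two numbers) with common suffixes. The inputs and suffix choices are assumptions, not data from the Proofpoint report.

```python
from itertools import product


def personalized_guesses(words, numbers, suffixes=("", "!", "123")):
    """Combine profile words and numbers into 'Word + Number + Suffix' candidates."""
    guesses = []
    for word, number, suffix in product(words, numbers, suffixes):
        for variant in (word.capitalize(), word.lower()):
            guesses.append(f"{variant}{number}{suffix}")
    return list(dict.fromkeys(guesses))  # drop duplicates, keep order


if __name__ == "__main__":
    # Hypothetical facts an attacker might scrape from a public profile.
    candidates = personalized_guesses(words=["Alex", "Rex"], numbers=["1987", "0412"])
    print(len(candidates), candidates[:4])
```

Four facts produce 24 candidates. A model that has learned which of these structures people actually use can rank them so the likeliest come first, potentially within a handful of attempts and below typical lockout thresholds.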

🧠 How AI Bypasses MFA and Detection

While multi-factor authentication (MFA) is one of the most effective defenses available, attackers are finding ways to bypass or exploit it with the help of AI.

📌 Simulated Behavior to Avoid Detection

Security systems often detect brute force attacks by monitoring typing speed, mouse movement, IP address changes, or login frequency. But AI is becoming capable of emulating human behavior in these areas:

- A 2024 study from researchers at ETH Zurich demonstrated that AI systems could bypass Google’s reCAPTCHAv2: using YOLO object-detection models, the researchers solved 100% of the image-based challenges in their tests, raising concerns about the long-term viability of CAPTCHA as a security measure.

- Advanced phishing kits like EvilProxy and Muraena let attackers intercept login sessions by proxying a real user’s input to the legitimate site, so the login carries genuine human behavior rather than simulated keystrokes or mouse movements.

📌 Phishing for MFA Codes

In many cases, attackers are not cracking MFA—they’re stealing it using phishing or social engineering techniques enhanced by AI.

- “MFA fatigue” attacks, in which users are bombarded with MFA prompts until one is accepted, were used in the 2022 breaches of Uber and Cisco, and the Cybersecurity and Infrastructure Security Agency (CISA) has published guidance on countering them.

- At the same time, AI-based tools such as WormGPT and FraudGPT emerged on dark web forums, offering large language model-based phishing scripts capable of generating personalized spear-phishing emails that mimic legitimate communications. These messages are increasingly used to trick users into handing over MFA codes in real-time.
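On the defensive side, MFA fatigue leaves a distinctive trace: many push prompts sent to one user in a short span. The function below is a minimal sketch that flags such bursts from a list of (timestamp, username) push events. The event format and the default threshold of 5 prompts in 10 minutes are assumptions, not values from CISA guidance.

```python
from collections import defaultdict


def flag_push_bursts(events, threshold=5, window=600):
    """Return users who received `threshold` or more MFA pushes within `window` seconds.

    `events` is an iterable of (timestamp_seconds, username) tuples in any order.
    """
    times_by_user = defaultdict(list)
    for ts, user in events:
        times_by_user[user].append(ts)

    flagged = set()
    for user, times in times_by_user.items():
        times.sort()
        start = 0
        for end, ts in enumerate(times):
            # Slide the window start forward until it spans at most `window` seconds.
            while ts - times[start] > window:
                start += 1
            if end - start + 1 >= threshold:
                flagged.add(user)
                break
    return flagged


if __name__ == "__main__":
    events = [(t, "bob") for t in range(0, 150, 30)] + [(0, "alice"), (3600, "alice")]
    print(flag_push_bursts(events))  # {'bob'}
```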

💡 Security tools that counter AI-enhanced phishing and MFA evasion – AI Cyberattacks Are Exploding

What You Can Do: Defensive Strategies for Individuals and Organizations

While AI-powered brute force attacks are becoming more sophisticated, there are still practical and effective ways to protect yourself and your organization. These strategies don’t require advanced technical expertise, but they do require consistency, planning, and ongoing vigilance.

Use a Password Manager

Password managers can generate and store long, complex, and unique passwords for every account. This eliminates the need for human-created patterns, which are often predictable.
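Under the hood, generating such a password is simple: pick each character independently from a large alphabet using a cryptographically secure random source. The sketch below uses Python’s standard `secrets` module. The 20-character length and 94-symbol alphabet are assumptions, not the defaults of any particular password manager.

```python
import math
import secrets
import string

# Letters, digits, and punctuation: 52 + 10 + 32 = 94 symbols.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length=20, alphabet=ALPHABET):
    """Choose every character independently with a cryptographically secure RNG."""
    return "".join(secrets.choice(alphabet) for _ in range(length))


def entropy_bits(length, alphabet_size=len(ALPHABET)):
    """Entropy of a uniformly random password: length * log2(alphabet size)."""
    return length * math.log2(alphabet_size)


if __name__ == "__main__":
    print(generate_password())
    print(f"{entropy_bits(20):.1f} bits")  # 131.1 bits
```

Because nothing in the output follows a human pattern, a model trained on leaked passwords has nothing to learn from it, and the attacker is left with a search space of roughly 2^131 possibilities.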

The National Institute of Standards and Technology (NIST) Digital Identity Guidelines (SP 800-63B) tell verifiers to permit pasting into password fields because password managers make users more likely to choose stronger passwords, and they require multi-factor authentication at higher assurance levels.

Popular tools include Bitwarden, 1Password, and Proton Pass. Many enterprise solutions also integrate password managers with single sign-on (SSO) and security dashboards.

Enable Multi-Factor Authentication (MFA) Wherever Possible

MFA significantly reduces the chance of unauthorized access, even if a password is compromised. The FBI, CISA, and NIST all recommend MFA as a core cybersecurity practice.

SMS-based MFA is better than no MFA at all, but more secure options include time-based one-time password (TOTP) apps like Google Authenticator, or hardware security keys like YubiKey.

Source: CISA MFA Fact Sheet

https://www.cisa.gov/sites/default/files/publications/MultiFactor-Authentication-Fact-Sheet.pdf
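To see why a TOTP app adds protection, it helps to look at how the codes are produced. The sketch below follows the TOTP algorithm from RFC 6238: an HMAC of the current 30-second time step, keyed with a secret shared between the app and the server, truncated to six digits. It assumes the secret is already raw bytes (authenticator apps usually exchange it as Base32, which `base64.b32decode` can convert) and is an illustration, not a vetted library.

```python
import hashlib
import hmac
import struct
import time


def totp(secret: bytes, for_time=None, step=30, digits=6, digestmod=hashlib.sha1) -> str:
    """Time-based one-time password per RFC 6238 (HOTP from RFC 4226 on a time counter)."""
    if for_time is None:
        for_time = time.time()
    counter = int(for_time // step)  # number of 30-second steps since the Unix epoch
    mac = hmac.new(secret, struct.pack(">Q", counter), digestmod).digest()
    offset = mac[-1] & 0x0F  # "dynamic truncation": low 4 bits pick a 4-byte window
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


if __name__ == "__main__":
    shared_secret = b"12345678901234567890"  # RFC 6238 test secret, not for real use
    print(totp(shared_secret, for_time=59, digits=8))  # 94287082 (RFC 6238 test vector)
    print(totp(shared_secret))  # the code an app would show right now
```

A stolen password alone cannot produce the next code, which is why TOTP blocks pure brute force. It does not stop a real-time phishing proxy that relays the code within its 30-second window, which is where hardware security keys are stronger.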

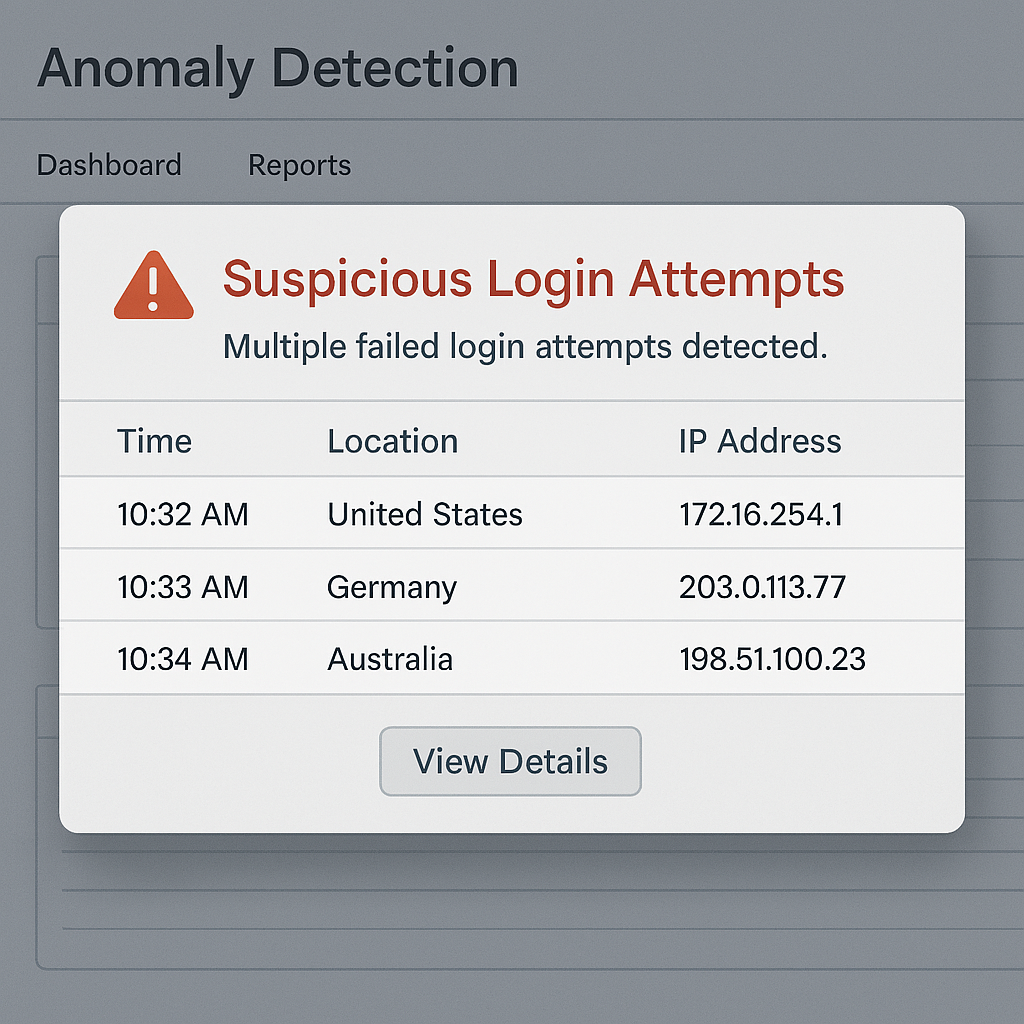

Monitor and Respond to Unusual Login Activity

Many brute force attacks generate subtle signs before a breach. Organizations should deploy behavior-based intrusion detection systems (IDS) that monitor logins for:

- Unusual times or locations

- IP addresses from unexpected regions

- Rapid login failures followed by success

Tools like CrowdStrike Falcon, Microsoft Sentinel, or open-source options like Wazuh can help flag this behavior.
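The last pattern in that list, a burst of failures followed by a success, is easy to express over raw login events. This is a minimal sketch with an assumed event format of (timestamp, username, succeeded) and illustrative thresholds. Tools such as Sentinel or Wazuh express the same idea in their own rule and query languages, not in Python.

```python
from collections import defaultdict


def failures_then_success(events, min_failures=5, window=300):
    """Flag successful logins preceded by many recent failures for the same user.

    `events` is a time-ordered iterable of (timestamp_seconds, username, succeeded).
    Returns a list of (username, timestamp) alerts.
    """
    recent_failures = defaultdict(list)
    alerts = []
    for ts, user, succeeded in events:
        # Keep only failures that fall inside the look-back window.
        fails = [t for t in recent_failures[user] if ts - t <= window]
        if succeeded:
            if len(fails) >= min_failures:
                alerts.append((user, ts))
            fails = []  # a success starts a fresh count
        else:
            fails.append(ts)
        recent_failures[user] = fails
    return alerts


if __name__ == "__main__":
    log = [(t, "alice", False) for t in range(0, 60, 10)]  # six quick failures
    log += [(70, "alice", True), (80, "bob", False), (90, "bob", True)]
    print(failures_then_success(log))  # [('alice', 70)]
```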

According to IBM’s 2023 Cost of a Data Breach Report, the average time to detect a breach was 204 days. Early anomaly detection can drastically reduce the scope and cost of an incident.

Source: IBM 2023 Cost of a Data Breach Report

Avoid Password Reuse at All Costs

Credential stuffing attacks rely on the fact that many people reuse the same credentials across multiple sites. If a single website is compromised, attackers can reuse that email and password combination elsewhere.

In a 2023 analysis by SpyCloud, 25 percent of exposed passwords were found to be reused in enterprise environments.

Even modifying a password slightly (such as changing the number at the end) is ineffective, since AI tools are trained to generate those common mutations.
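A password-change form can catch that kind of minor edit. The sketch below computes Levenshtein edit distance and rejects a new password within an assumed three edits of the old one. It can only run at change time, when the user has just typed the old password, because the stored copy should be a hash.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, or substitutions."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,                       # delete char_a
                current[j - 1] + 1,                    # insert char_b
                previous[j - 1] + (char_a != char_b),  # substitute (free if equal)
            ))
        previous = current
    return previous[-1]


def too_similar(old: str, new: str, max_distance: int = 3) -> bool:
    """True if `new` is only a small, case-insensitive edit of `old`."""
    return levenshtein(old.lower(), new.lower()) <= max_distance


if __name__ == "__main__":
    print(too_similar("Secure2024", "Secure2025"))              # True
    print(too_similar("Secure2024", "violet-anchor-92-drift"))  # False
```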

Train and Educate Users

Employee awareness is critical. Cybersecurity training should include:

- Avoiding obvious or reused passwords

- Recognizing phishing attempts

- Using password managers and MFA

- Reporting suspicious login activity

According to the Verizon 2023 Data Breach Investigations Report, 74 percent of breaches involved a human element, including social engineering and credential misuse.

The Future of Brute Force Attacks: Where AI Is Headed Next

As artificial intelligence continues to evolve, so do the capabilities of brute force attack models. What began as a way to crack text-based passwords has already started moving beyond alphanumeric guessing into more complex and sensitive forms of authentication.

💡 How nation-state actors are leveraging AI for advanced cyberwarfare tactics – AI-Powered Cyberwarfare in 2025

Targeting Biometric Systems

AI models are increasingly being applied to spoof biometric data—such as fingerprints, facial scans, and voice recognition systems.

- In 2018, researchers at New York University and Michigan State University demonstrated that AI-generated synthetic fingerprints, called “DeepMasterPrints,” could match more than 20 percent of the fingerprints in a test dataset by exploiting the partial-print matching used by many fingerprint sensors.

- Researchers from Tel Aviv University in 2022 showed that deepfake audio could reliably bypass voice-based authentication systems in banking applications, raising concerns about the future of voice biometrics.

💡 Deepfake audio could reliably bypass voice-based authentication systems – AI Voice-Cloning Scams

Predicting Password Behavior Before It Happens

Advanced generative models may soon be able to predict future passwords or password changes based on behavioral trends. These include:

- Seasonal password choices like “Spring2025!”

- Passwords that reflect known user habits or life events (e.g., child’s name + year)

- Time-based rotations (e.g., changing “Secure2024” to “Secure2025” annually)

Such prediction engines could be trained using behavioral data scraped from breached sources, social media, and enterprise credential repositories.
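Even without machine learning, the predictable part of these habits is easy to enumerate. The sketch below generates season-and-year candidates and bumps a trailing year the way an annual rotation would. The season list, suffixes, and one-year look-ahead are assumptions; a trained model would learn such patterns, and their relative likelihood, from data instead.

```python
import re
from itertools import product

SEASONS = ("Spring", "Summer", "Autumn", "Fall", "Winter")


def seasonal_candidates(current_year, lookahead=1, suffixes=("", "!", "@")):
    """'Season + Year + Suffix' guesses for this year and the next `lookahead` years."""
    years = range(current_year, current_year + lookahead + 1)
    return [f"{season}{year}{suffix}"
            for season, year, suffix in product(SEASONS, years, suffixes)]


def next_rotation(password):
    """Predict an annual rotation: 'Secure2024' -> 'Secure2025'. None if no trailing year."""
    match = re.fullmatch(r"(.*\D)?(\d{4})", password)  # exactly four trailing digits
    if match is None:
        return None
    prefix = match.group(1) or ""
    return f"{prefix}{int(match.group(2)) + 1}"


if __name__ == "__main__":
    print(seasonal_candidates(2025)[:3])  # ['Spring2025', 'Spring2025!', 'Spring2025@']
    print(next_rotation("Secure2024"))  # Secure2025
```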

Cracking Encrypted Communications

Though still highly theoretical, some researchers are beginning to model how AI could assist in reducing the computational complexity required to brute force certain types of encryption.

- In 2023, researchers at the University of Maryland tested whether reinforcement learning models could identify weaknesses in cryptographic key generation patterns in pseudo-random number generators.
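A classic, non-AI example shows why predictable key generation matters. If a key comes from a general-purpose PRNG seeded with a timestamp, an attacker who knows roughly when it was made only has to try a few thousand seeds instead of 2^128 keys. The sketch below illustrates that weakness; it is not the method from the University of Maryland study. Python’s `random` module is not meant for security, and `secrets` is the appropriate choice for keys.

```python
import random
import secrets


def weak_key(seed: int, nbytes: int = 16) -> bytes:
    """INSECURE: derive key bytes from Python's Mersenne Twister seeded with an integer."""
    return random.Random(seed).getrandbits(8 * nbytes).to_bytes(nbytes, "big")


def recover_seed(key: bytes, start: int, end: int):
    """Brute-force every candidate seed in [start, end) until the key is reproduced."""
    for seed in range(start, end):
        if weak_key(seed, len(key)) == key:
            return seed
    return None


def strong_key(nbytes: int = 16) -> bytes:
    """Secure alternative: bytes from the operating system's CSPRNG."""
    return secrets.token_bytes(nbytes)


if __name__ == "__main__":
    created_at = 1_700_000_000  # hypothetical Unix timestamp used as the seed
    key = weak_key(created_at)
    # An attacker who knows the key was made within the last hour tries 3,601 seeds.
    print(recover_seed(key, created_at - 3600, created_at + 1) == created_at)  # True
```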

While public-key encryption remains secure in practice for now, the rise of quantum computing combined with AI pattern recognition may eventually accelerate the discovery of encryption vulnerabilities.

💡 How school systems are vulnerable to AI-driven attacks due to weak infrastructure – School Cybersecurity Under Attack in 2025

Conclusion

AI-powered brute force attacks represent a significant shift in the cybersecurity threat landscape. These are no longer slow, noisy password-guessing scripts—they’re adaptive, predictive, and increasingly effective against both individuals and enterprises. As tools like PassGAN evolve and integrate with behavior-simulating systems, even relatively strong passwords can become vulnerable.

The key to staying ahead isn’t just technology—it’s behavior. Adopting password managers, enabling MFA, reducing reuse, and continuously monitoring login behavior can drastically improve your resilience against these attacks.

Cybersecurity in 2025 isn’t about staying one step ahead—it’s about not falling several steps behind. AI may be changing the rules, but the fundamentals of strong digital hygiene still apply.


Key Takeaways

- AI models like PassGAN are trained on massive datasets of leaked passwords, allowing them to guess real user credentials with startling accuracy.

- Predictability and password reuse remain the biggest enablers of brute force success.

- MFA, especially hardware-based or authenticator apps, can reduce brute force risk—though AI is developing ways to mimic user behavior or phish MFA tokens.

- Future threats may involve AI targeting biometric systems, predicting password behavior based on context, or even accelerating cryptanalysis.

- Open-source access to AI hacking tools lowers the barrier for attackers and increases risk for organizations of all sizes.

FAQ: AI-Powered Brute Force Attacks

Q: Is AI really better than traditional brute force tools?

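In many cases, yes. Pure brute force tools work through combinations blindly, while AI models like PassGAN generate guesses based on real human behavior, which makes them more efficient. In practice they are most effective alongside rule-based crackers like Hashcat rather than as a replacement.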

Q: Can MFA stop AI-powered brute force attacks?

MFA is still one of the best defenses, especially hardware-based or app-generated codes. However, attackers are increasingly using phishing and behavior simulation to bypass or steal MFA tokens.

Q: Is this something only governments or large corporations should worry about?

No. Because many AI hacking tools are open source, small businesses and individuals are just as likely to be targeted—especially if using common passwords or reusing credentials across sites.

Q: Are biometric logins safer than passwords?

Biometrics are generally harder to replicate but not immune. Research into synthetic fingerprints and deepfake voice attacks shows that AI may eventually pose serious threats to biometric systems as well.