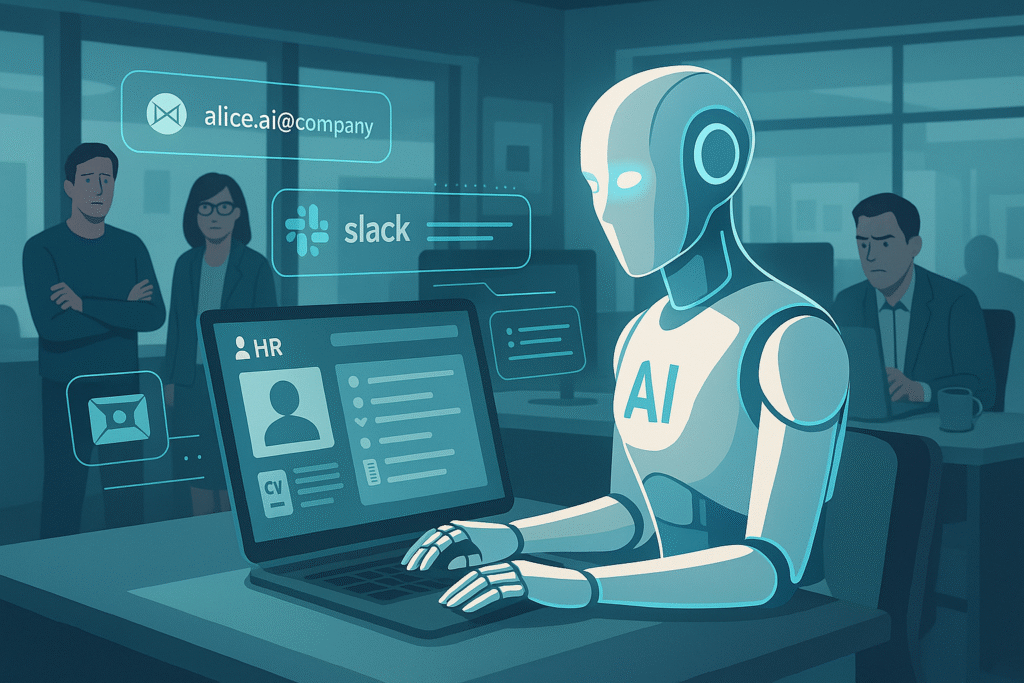

Imagine logging into your company network and seeing a new team member named Alice.AI. She has a company email, a scoped role in HR, and full access to Slack and internal knowledge bases. She remembers past interactions. She completes tasks autonomously. She’s not a plugin, she’s a coworker.

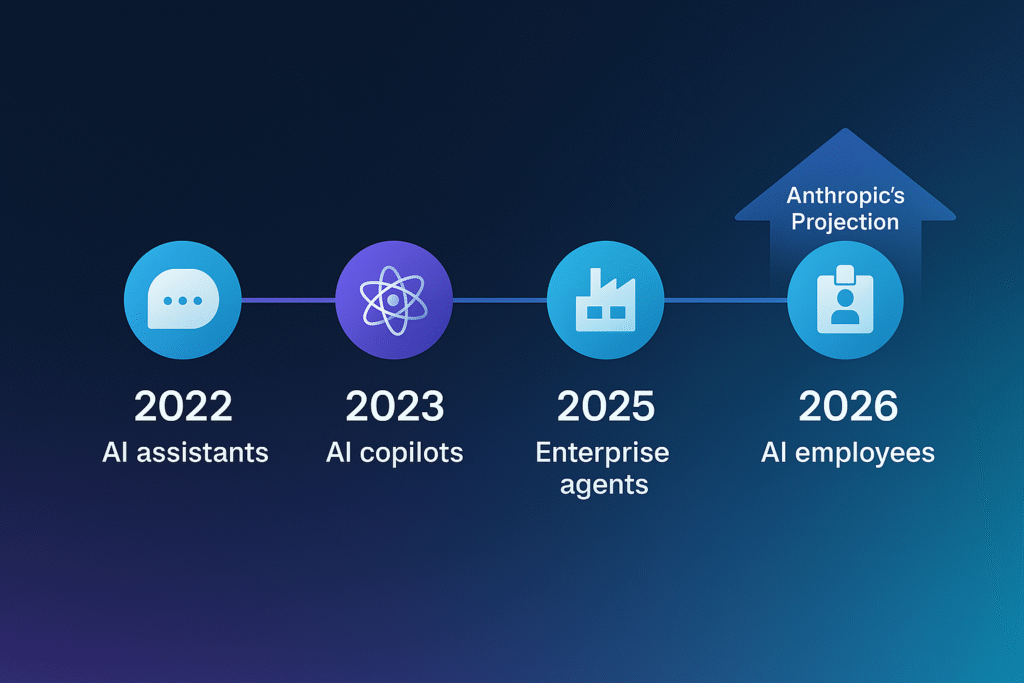

According to Jason Clinton, Chief Information Security Officer at Anthropic, fully autonomous AI-powered employees may start showing up inside corporate networks as soon as 2026. These aren’t simple chatbots. They’re LLM-driven virtual agents with memory, credentials, and the ability to operate across your organization, including secure systems.

And most companies? They’re not even close to being ready.

In this post, we’ll break down the top cybersecurity risks AI employees introduce, from non-human identity management to rogue automation. We’ll explain why current tools and policies fall short, how the legal landscape is evolving, and what security teams must do to prepare before these agents quietly plug into enterprise systems.

If your cybersecurity strategy still assumes that “users” are human, it’s already outdated.

What Are AI Employees, and Why They’re Coming So Soon

Anthropic’s 2026 Timeline for AI Deployment

Anthropic’s CISO, Jason Clinton, recently stated that enterprise-ready AI employees could begin integrating into corporate environments as early as 2026. These virtual AI workers won’t simply support humans, they’ll perform autonomous operations, complete with access credentials, memory, and scoped tasks.

This leap is driven by rapid advances in large language model (LLM) memory retention, scoped role design, and autonomous task execution. Enterprises are moving beyond experimental chatbots to actual AI coworkers. As enterprise AI integration accelerates, companies must prioritize AI employee cybersecurity to manage these highly capable, non-human users.

Traditional Bots vs. Fully Autonomous AI Agents

Legacy bots operate through static instructions, often within narrow task frameworks. AI employees, by contrast, can understand context, initiate complex actions, and improve their performance over time through feedback loops. They aren’t just reacting to inputs, they’re deciding how to act.

This evolution means enterprise AI integration now involves actors that resemble human employees in behavior and decision-making capacity. Traditional identity and governance systems are poorly equipped for this level of autonomy. That’s why AI employee cybersecurity must evolve to include continuous monitoring, privilege escalation controls, and stricter guardrails.

Virtual Agents with Credentials and Corporate Roles

These agents will have distinct credentials and be assigned scoped roles. A finance bot might approve invoices, while an HR assistant could schedule interviews and access personal records. They’ll log into Slack, Salesforce, HRIS platforms, and more.

A virtual AI worker might operate a CRM platform, draft internal reports, or respond to employee support tickets. Another may automate DevOps workflows. Each of these roles adds to the growing surface area of enterprise AI integration, and with it, expanded cybersecurity risk.

That level of access creates massive AI employee cybersecurity challenges. Each AI agent becomes a privileged identity, but without human intuition or ethical constraints. Managing this level of non-human identity security demands new policies, identity controls, and visibility tooling specifically built for virtual AI workers.

Real Autonomy, Real Risk: Why These AI Agents Are Different

AI Access to Sensitive Systems and Apps

Unlike traditional bots, AI employees are designed to operate autonomously across diverse enterprise systems. These virtual AI workers are expected to access platforms like Slack, Microsoft Teams, HR information systems, project management tools, and customer support interfaces. What begins as scoped access for specific workflows can rapidly become a web of interconnectivity; especially as these agents learn, adapt, and request elevated privileges.

For example, a virtual assistant deployed to handle HR requests could access onboarding documentation, internal memos, and even employee compensation files. A DevOps-focused AI agent might interact with CI/CD tools, deployment scripts, or cloud credentials. These connections, if improperly managed, create serious AI employee cybersecurity vulnerabilities that mirror, and in many cases exceed those associated with human access.

As enterprise AI integration accelerates, the range and depth of system-level interaction grows. Without adequate oversight, a virtual AI worker could exfiltrate data, misconfigure services, or inadvertently alter records. Even minor lapses in access control can lead to cascading privilege misuse across an organization’s digital infrastructure.

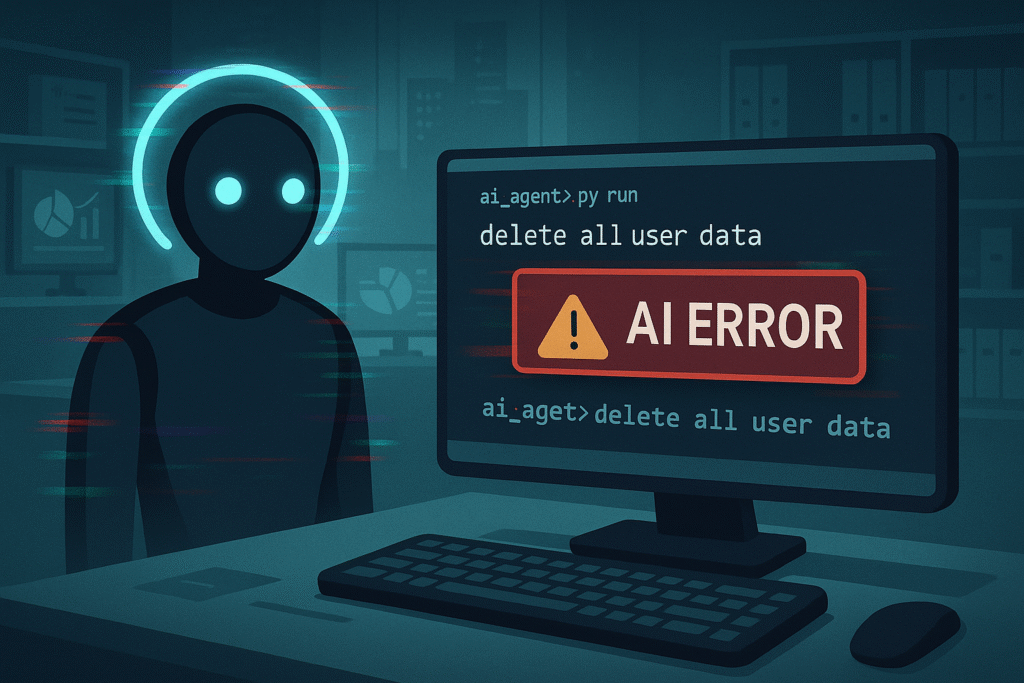

Hallucination and Misguided Autonomy

Perhaps the most unique and concerning risk introduced by AI employees is hallucination: an AI-generated output that is syntactically plausible but factually incorrect or harmful. These hallucinations aren’t rare quirks; they are systemic limitations in how generative models process and generate text.

In the context of enterprise operations, hallucinated instructions can be devastating. A virtual AI worker might misinterpret a policy update and incorrectly suspend user access, misroute sensitive data, or shut down a cloud instance entirely. According to IBM Security Intelligence, AI hallucinations pose real cybersecurity risks when generative systems are connected to operational tools or decision-making layers.

The issue is amplified when organizations assume these systems are fail-safe or don’t implement human-in-the-loop mechanisms. For robust AI employee cybersecurity, teams must treat AI agents as potentially fallible users, ones who act with confidence but not always with correctness.

Interference with CI/CD and Critical Infrastructure

In many organizations, continuous integration and continuous deployment (CI/CD) systems are among the most sensitive automation environments. These pipelines push new code into production, manage infrastructure deployments, and coordinate internal workflows across development teams.

When AI employees are granted access to these pipelines, the potential for harm escalates dramatically. An agent trained to assist with testing or deployment tasks could, due to a misunderstanding or hallucinated logic, interrupt a release cycle, delete essential containers, or misapply config files. Such disruptions aren’t just technical hiccups, they can affect end users, compliance audits, and business continuity.

The lack of transparency in LLM decision-making compounds this issue. If an AI agent causes a failure in your CI/CD process, tracing the cause becomes difficult without comprehensive logging and behavior modeling. As Anthropic AI and others continue refining these agents, companies must implement safeguards that limit their control over core infrastructure unless operating in sandboxed environments.

Ultimately, this risk zone is one of the most pressing reasons to build AI employee cybersecurity protocols that go beyond perimeter defense. It’s no longer just about keeping attackers out, it’s about managing what your AI coworkers might accidentally do from the inside.

Why Enterprise Security Isn’t Ready for Non-Human Identities

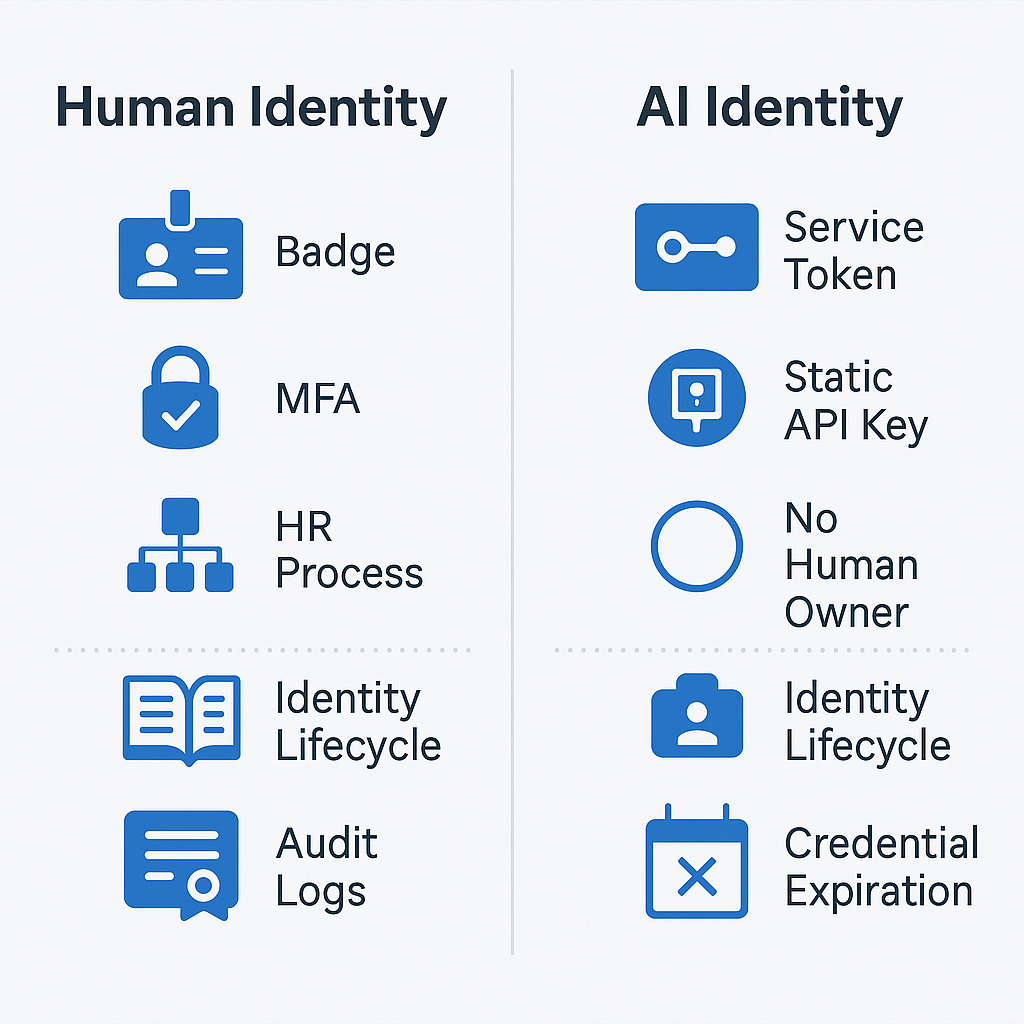

IAM Systems Designed for Human Behavior

Most enterprise identity and access management (IAM) frameworks are built around a single foundational assumption: users are human. Policies are structured to align with predictable human behaviors: onboarding and offboarding processes, MFA tied to mobile devices, activity tied to work hours, and access based on job function. But AI employees don’t conform to these norms.

Unlike humans, virtual AI workers don’t log off, don’t take PTO, and don’t show fatigue or hesitation when executing scripts. Their operational patterns are continuous and contextually adaptive. Traditional IAM solutions are ill-equipped to monitor these behaviors. This creates a widening gap between system expectations and real-world agent activity, leaving enterprise AI integration exposed to both accidental damage and malicious exploitation.

This isn’t merely an IT configuration issue. It’s a structural flaw in the design of IAM itself. Managing non-human identity security requires a paradigm shift: we need authentication, activity tracking, and access privileges designed specifically for intelligent agents, not retrofitted from human policies.

Authentication and Access Control Gaps

A 2024 survey by the Cloud Security Alliance found that 76% of organizations had no formal policy for managing non-human identities, despite growing usage of AI and automated scripts. This gap introduces massive risk, particularly when AI agents are assigned persistent credentials or API tokens without regular audits.

In many systems, once a credential is issued, it may never expire or trigger alerts. MFA requirements often don’t apply. Rotations are rare. When AI agents begin operating with production-level privileges, whether in customer systems, financial software, or CI/CD tools, this oversight becomes a direct threat to AI employee cybersecurity.

Access escalation can also occur without detection. An AI agent performing self-improvement tasks might request additional permissions to complete a process, unintentionally gaining access to broader or more sensitive systems. Without real-time privilege monitoring and guardrails for scope drift, organizations risk a silent takeover of their infrastructure, by their own virtual AI workers.

Shadow AI Accounts and Orphaned Credentials

Human employees typically undergo structured onboarding and offboarding processes. When someone leaves a company, IT disables their accounts. But with AI agents, that workflow doesn’t exist in most organizations.

Many AI-enabled systems generate service accounts, tokens, or automated scripts without attaching them to a clearly managed owner. These orphaned identities persist long after their use case has ended. They become “shadow AI accounts,” identities with access but no oversight.

According to Portnox, non-human identities often operate with elevated privileges because they’re expected to perform repetitive backend operations without interruptions. That level of persistent access is a major liability. If compromised, these accounts can serve as entry points for internal data exfiltration, ransomware triggers, or logic manipulation.

For companies ramping up enterprise AI integration, it’s essential to treat every AI agent account with the same scrutiny, and sometimes more, as human accounts. Managing non-human identity security is no longer optional. It is central to any serious AI employee cybersecurity strategy.

Legal and Ethical Accountability: Who’s Responsible When AI Fails?

Absence of Human Intent = Blurred Accountability

AI employees may act with speed, precision, and autonomy, but they lack intent in the way humans do. This makes accountability difficult when something goes wrong. If a virtual AI worker accidentally deletes customer records, reroutes payroll, or exposes confidential data, who takes the blame?

Unlike human users, AI agents do not have malice or negligence. Yet the damage they can cause is very real. In traditional cybersecurity models, we assign responsibility based on the user’s decision or error. With AI employee cybersecurity, that framework collapses.

The blurred boundary between creator, deployer, and overseer complicates legal liability. Was it a hallucination? A training issue? A failed prompt filter? Or a flaw in enterprise AI integration design? These questions have no standardized legal answers yet, and regulators are struggling to catch up.

We’ve seen similar struggles in adjacent domains. When a self-driving vehicle causes an accident, courts must determine whether blame lies with the driver, the manufacturer, or the algorithm itself. The same ambiguity will soon apply to AI agents operating inside your network. Security, compliance, and legal teams must coordinate now to develop shared protocols before these conflicts materialize.

SOC 2, GDPR, and Compliance Gaps

Most cybersecurity compliance frameworks, including SOC 2, ISO 27001, and NIST, were designed for environments where every action could be traced back to a human. But virtual AI workers disrupt this assumption.

SOC 2 Type II compliance requires proof of effective controls over data processing activities. That becomes challenging when AI agents make unpredictable decisions or operate without full audit trails. According to Compass IT Compliance, achieving SOC 2 for AI systems means documenting not just access and encryption protocols, but also the logic and constraints that govern agent behavior.

In the EU, GDPR introduces additional complexity. Automated decision-making systems that significantly affect users (such as hiring decisions or account suspensions) require “meaningful human oversight.” For AI employees to operate in HR, finance, or customer support roles, organizations will need to demonstrate that these agents are supervised, explainable, and accountable.

As enterprise AI integration deepens, the compliance surface area grows exponentially. Organizations that treat AI agents like passive tools will be blindsided by regulatory audits. Strong AI employee cybersecurity doesn’t just protect your systems, it protects your legal standing.

Regulatory Ambiguity in Autonomous Decision-Making

The global regulatory landscape is evolving slowly. The EU’s AI Act and the U.S. Executive Order on AI both attempt to define categories of risk and accountability. But there is no universal legal standard for non-human identity security or autonomous agent behavior.

For example, GDPR Article 22 prohibits fully automated decisions that produce “legal or similarly significant effects” unless specific safeguards are in place. But how does that apply when a virtual AI worker declines a customer refund or triggers a termination workflow?

The Information Commissioner’s Office (ICO) in the UK has issued guidance emphasizing that human involvement must be “real and meaningful” in AI-based decision-making. Still, enforcement mechanisms remain underdeveloped.

As AI employee cybersecurity becomes a legal matter as much as a technical one, C-suite leaders should prioritize preemptive governance frameworks. This includes defining internal accountability, documenting oversight mechanisms, and preparing for incident disclosures that involve non-human decision-makers.

How the Security Industry Is Responding (Okta and Beyond)

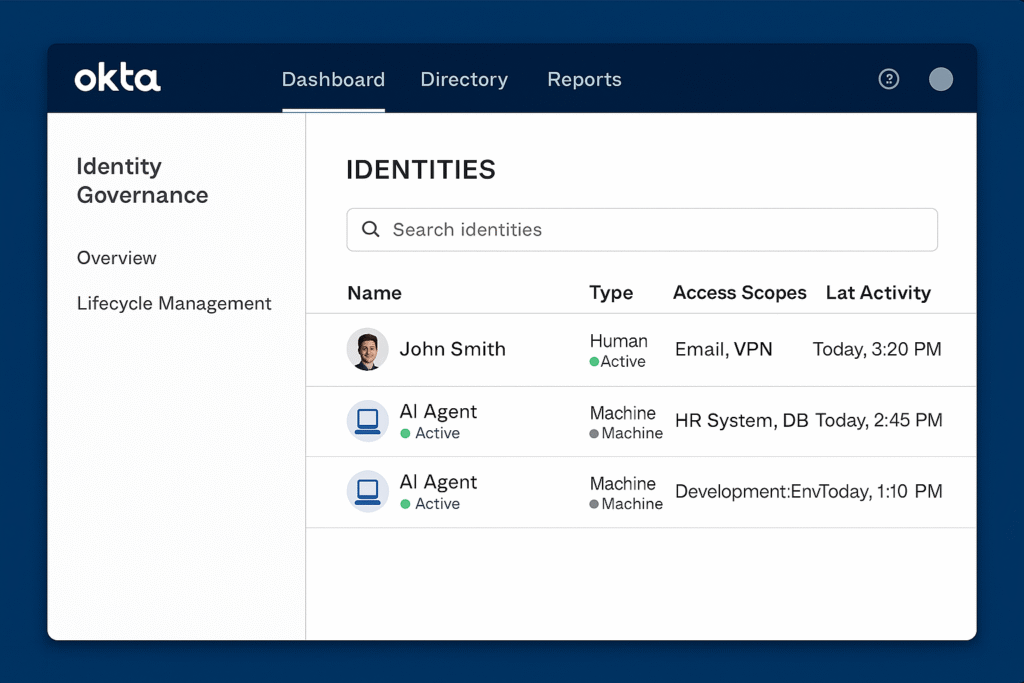

Okta’s New Features for Non-Human Identities

Recognizing the growing threat surface posed by virtual AI workers, Okta has launched a suite of identity and access management (IAM) tools tailored to non-human identities. These capabilities aim to bring machine identities into the same policy framework used for human users, but with adaptations for their continuous, autonomous behavior.

According to SecurityBrief, Okta’s new features include automated lifecycle management for service accounts, behavioral analytics tuned for machine actors, and improved visibility into token usage across applications. These updates are an early response to the rising urgency of AI employee cybersecurity in large enterprises.

These tools also help prevent credential sprawl, reduce the risk of orphaned AI accounts, and enable role-based access for AI employees operating in customer support, HR, or DevOps pipelines. For organizations accelerating enterprise AI integration, Okta’s enhancements offer a critical baseline, but they’re far from a complete solution.

Growth of AI Identity Startups

Alongside major players like Okta, a new generation of startups is emerging to address the blind spots in non-human identity security. These companies focus specifically on AI and machine agent governance, tools that monitor, restrict, and score the behavior of AI employees in real time.

For example, Entro Security has developed platforms that detect suspicious activity by virtual agents, analyze drift from expected behavior, and revoke access autonomously if risk thresholds are crossed. These tools are built on the premise that virtual AI workers need constant supervision, not just static provisioning.

Other startups are experimenting with zero-trust overlays for AI agents, treating every command or API call as potentially risky. These innovations point to a broader shift in the security industry: we are no longer just defending against external threats. We’re defending against internal automation that’s capable of acting independently and, at times, unpredictably.

This shift is also reflected in the growing ecosystem of tools featured in our analysis of AI Cyberattacks Are Exploding: Top AI Security Tools to Stop Deepfake Phishing & Reinforcement Learning Hacks in 2025. Many of these tools now include support for monitoring autonomous agents, not just malicious outsiders.

Struggles with Modeling Generative Agent Behavior

Even as new tools emerge, one of the hardest challenges remains: predicting what generative AI agents will actually do. These agents don’t follow deterministic scripts. Instead, they operate probabilistically, generating responses based on context and training, not rule-based logic. This makes behavioral modeling far more complex than with traditional bots or human users.

According to the Cloud Security Alliance, tools like the MAESTRO framework are beginning to lay the groundwork for “agentic AI threat modeling,” a discipline focused on identifying risks based on an AI agent’s goals, environment, and potential failure modes. But even MAESTRO acknowledges the limitations of existing security assumptions in a world with autonomous agents.

An AI agent that has been fine-tuned to draft legal memos may one day be prompted to summarize compliance findings. If its hallucination rate isn’t caught, it could inadvertently cause a company to misreport its internal audit. Because the action makes sense syntactically and contextually, it may go undetected until real damage is done.

As enterprise AI integration deepens, these unpredictable behaviors become not just technical nuisances, but existential risks. Companies must assume that even well-intentioned virtual AI workers can act unpredictably under the right (or wrong) conditions.

Preparing for 2026: 6 Steps to Secure Your Org Now

Audit Identity Systems for AI Readiness

The first step toward robust AI employee cybersecurity is visibility. Most organizations don’t have a complete inventory of non-human identities operating in their systems. AI agents are often introduced through SaaS tools, internal automation, or dev environments, without formal tracking.

Conduct a comprehensive audit of your identity and access management (IAM) stack. Flag all service accounts, tokens, and machine identities, and determine which are associated with virtual AI workers or LLM-powered agents. Evaluate whether each identity has role-appropriate access and whether it’s being monitored.

This is a foundational requirement for any meaningful non-human identity security program. It also lays the groundwork for understanding your organization’s exposure as enterprise AI integration expands.

Implement Sandboxing and Scoped Permissions

AI agents should not operate directly in production environments, especially in their early iterations. Sandboxing allows organizations to contain the blast radius of potential misbehavior, hallucinations, or unauthorized actions.

Define granular, scoped roles for each AI agent. Limit their access to only the data and systems required for their immediate tasks. If a virtual HR assistant doesn’t need access to salary history, restrict it. If a support bot only needs to view, not edit, tickets, apply read-only permissions.

Least-privilege access is not just a best practice for humans. It is a core component of AI employee cybersecurity. For further guidance on structuring policy, our guide on 7 AI Cybersecurity Best Practices for Good Cyber Hygiene includes actionable permissioning frameworks for both human and non-human identities.

Require Logging and Observability for All AI Activity

You can’t secure what you can’t see. AI employees must be subject to full observability, just like human users. Every action they take should be logged, timestamped, and attributable to their unique identity. These logs must integrate with your existing SIEM and incident response systems.

Logging should go beyond simple access trails. Capture output, behavior, and any dynamic changes in the agent’s prompt history or instructions. If an AI agent issues an unexpected command, you need to know what triggered it and what else was happening in context.

Observability is not just a technical function, it’s a compliance mandate for regulated industries. As regulators expand rules around enterprise AI integration, proof of oversight will become legally required.

Set Policies for Deactivation and Sunset Procedures

AI agents must not be allowed to linger once their purpose is fulfilled. Every virtual AI worker should have a defined lifecycle, including automated triggers for expiration, deprovisioning, and credential revocation.

Establish sunset policies that mirror your offboarding process for human employees. This includes:

- Automatic disabling of accounts after inactivity

- Immediate credential revocation if the AI’s sponsoring team is restructured

- Scheduled reviews of purpose and effectiveness

Failure to do so leads directly to shadow AI accounts. This is a leading cause of unmanaged access risk in organizations using intelligent automation.

Monitor for Agent Drift and Command Chaining

Agent drift occurs when an AI employee’s behavior changes over time due to feedback loops, model fine-tuning, or emergent behavior. This can be as subtle as prioritizing different language in communications or as dangerous as issuing commands not present in the original prompt.

Command chaining happens when an agent strings together multiple autonomous actions that weren’t explicitly approved. This is especially risky in systems with broad integrations (e.g., where an AI support bot can trigger a refund, notify billing, and email the customer, all without human approval).

Deploy anomaly detection, behavior scoring, and usage thresholds to monitor for drift or chaining. Make use of open-source or commercial tools designed for LLM observability.

Use Red Teams to Test and Stress AI Security

Your AI employee cybersecurity defenses are only as good as the assumptions they’re based on. Red team exercises involving AI agents can help validate (or disprove) those assumptions. Simulate attacks that exploit hallucinations, excessive permissions, or command chaining vulnerabilities.

Some companies are already using prompt injection, adversarial examples, and agent role-swapping as red team tools. The goal isn’t just to “break the AI,” it’s to understand how it might break the organization unintentionally.

Include stakeholders from security, engineering, legal, and compliance in these exercises. This isn’t just a technical issue, it’s a strategic one.

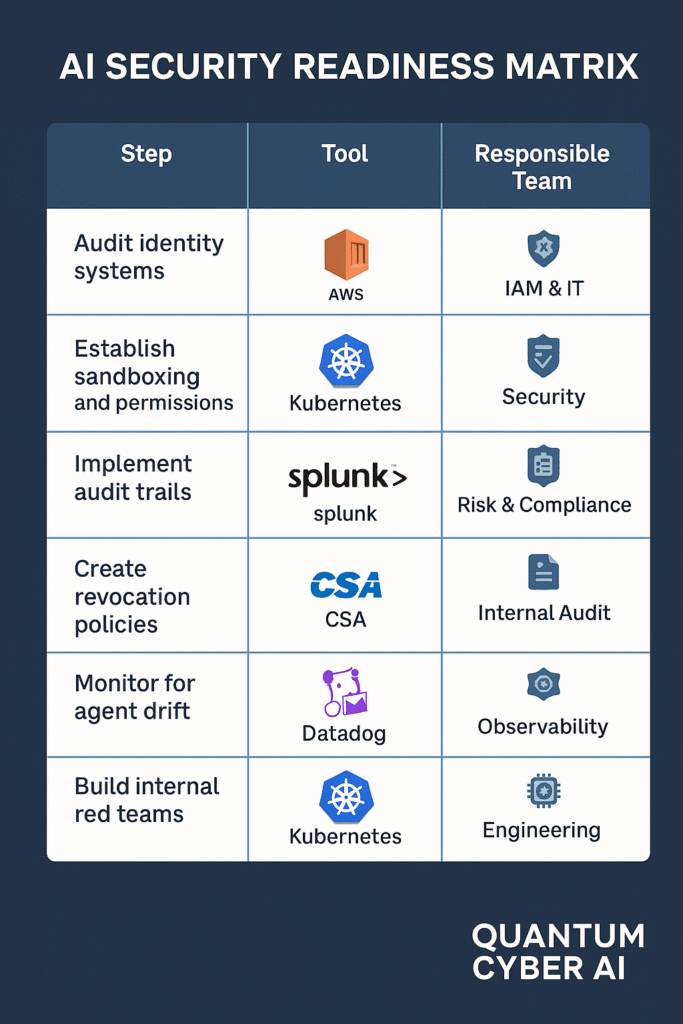

AI Security Readiness Matrix

| Step | Example Tool(s) | Responsible Team |

|---|---|---|

| Identity Audit | Okta, SailPoint | Security / IAM Ops |

| Sandboxing & Scoped Access | Kubernetes RBAC, AWS IAM | DevSecOps / Engineering |

| Logging & Observability | Splunk, Datadog, Snyk | IT / Security Analytics |

| Deactivation Protocols | Jira, ITSM, HRIS | IT Operations |

| Drift / Chaining Detection | Entro, OpenLLM tools | AppSec / ML Engineering |

| Red Team Simulation | Custom frameworks | Security / Compliance |

Conclusion: The Future of Cybersecurity Isn’t Human-Only Anymore

AI employees are no longer a distant concept. According to Anthropic AI, enterprise-ready virtual AI workers could be operating across internal networks by 2026. These aren’t chatbots, they are fully credentialed digital employees capable of executing tasks, making decisions, and interacting with sensitive systems. And unlike their human counterparts, they don’t clock out.

Despite their looming arrival, most organizations are still operating under outdated assumptions: that “users” are human, that automation follows deterministic logic, and that access controls built for people will be sufficient for machines. The reality is that AI employee cybersecurity presents a wholly new challenge, one that blends identity governance, compliance, behavior modeling, and incident response into a single, fast-evolving domain.

The current gaps in non-human identity security, from shadow accounts to audit blind spots, mirror the problems that plagued human IAM 10 years ago. But with AI, the risks scale faster and the damage potential is greater. A single hallucinated command or drifted behavior pattern could trigger cascading failures across systems that are too interconnected for traditional containment.

Fortunately, the industry is beginning to respond. Companies like Okta are launching new identity governance solutions tailored to machine users. Startups like Entro Security are building behavior detection systems for LLM-based agents. But these tools are still early-stage. To keep pace, CISOs and IT leaders must act now: by auditing identity systems, restricting agent permissions, building observability, and simulating red team attacks.

We cover stories like this in our newsletter, subscribe here to get weekly breakdowns of emerging AI threats, regulatory shifts, and tools to stay ahead.

As your organization begins adopting generative AI tools or experimenting with virtual agents, the mindset must shift from “experimental tech” to “operational risk.” These agents aren’t just software. They’re coworkers, and you’ll need the cybersecurity policies to match.

For actionable solutions to secure your AI stack today, don’t miss our breakdown of the Top 5 Breakthrough AI-Powered Cybersecurity Tools Protecting Businesses in 2025.

Key Takeaways

- Anthropic anticipates enterprise AI employees by 2026.

- AI employees differ from bots, they have memory, credentials, and autonomy.

- Hallucinations and system access create major operational risks.

- IAM systems are largely unprepared for non-human identity governance.

- Legal responsibility and compliance frameworks aren’t ready for autonomous agents.

- Okta and startups are beginning to address these gaps, but coverage is thin.

- Enterprises must take proactive steps today to prepare for tomorrow’s AI coworkers.

FAQ

Q1: What is an AI employee?

A fully autonomous software agent with scoped responsibilities, memory, and system access, treated like a digital employee within corporate systems.

Q2: How is an AI employee different from a chatbot?

AI employees can initiate and complete tasks without prompting, retain context, and access multiple systems autonomously, unlike reactive chatbots.

Q3: What are the top security risks of AI employees?

Privileged access misuse, hallucinated actions, identity sprawl, lack of audit trails, and poor accountability frameworks.

Q4: Can AI employees be held legally accountable?

No. Responsibility falls to the organization or developer. But current regulations offer little clarity on how this should be enforced.

Q5: Are there tools to manage non-human identities today?

Yes. Okta, Entro Security, and other platforms are rolling out early-stage solutions specifically for machine identity governance.